Vaccine Order and Reefer Optimization component

Overview

This service exposes a send-order-and-optimize API that builds a shipment plan for all the vaccine lots when a new order arrives. The implementation uses CPLEX and an event-driven approach to consume continuous streams of information coming from manufacturing, the refrigerator inventory, and the available transportation capacity and cost.

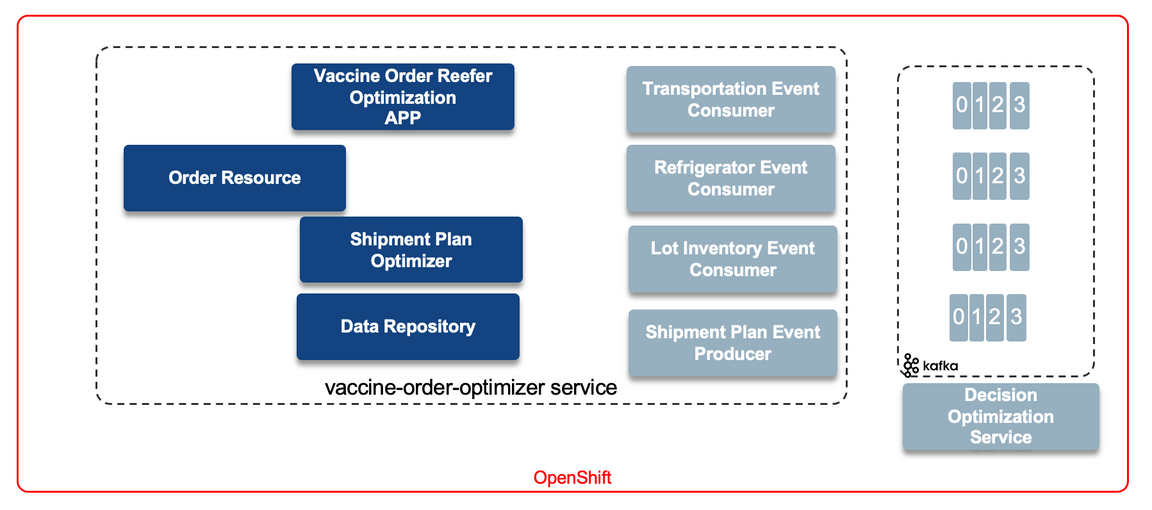

The following diagram illustrates all the components working together to support and event-driven shipment plan real time optimization:

- The app is written in Python with Flask and runs on the Gunicorn WSGI server in a container deployable on OpenShift. A Dockerfile is included in the repository.

- A set of Resource classes exposes the REST API for orders and optimization. Other resources are implemented for demonstration purposes.

- The optimization component transforms the data into pandas structures for CPLEX to run on. Because the domain is very limited, the optimization can run inside the Python app, but a remote client is also implemented to call the decision optimization service when the problem becomes more complex.

- A set of Kafka consumers, using Avro schemas, receives the real-time data.

See the code in the Vaccine order optimizer GitHub repository.
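The consumers listed above keep the optimizer supplied with fresh data. As a minimal sketch of that idea, assuming each event carries a key and that only the most recent record per key matters, the state handed to the optimizer could be held as shown here. The class name `LatestState`, the topic name `reefers`, and the event fields (`lot_id`, `reefer_id`, `qty`, `status`) are hypothetical and are not taken from the repository; the Kafka and Avro plumbing is left out on purpose.

```python
from collections import defaultdict


class LatestState:
    """Keeps the most recent record per key for each topic (hypothetical helper)."""

    def __init__(self):
        # topic name -> {record key -> latest record value}
        self._tables = defaultdict(dict)

    def apply(self, topic, key, value):
        """Upsert a record; a None value is treated as a delete."""
        if value is None:
            self._tables[topic].pop(key, None)
        else:
            self._tables[topic][key] = value

    def snapshot(self, topic):
        """Return the current records as a list, ready to load into a DataFrame."""
        return list(self._tables[topic].values())


state = LatestState()
# Two events for the same lot: only the second one should survive.
state.apply("vaccine-inventory", "lot-1", {"lot_id": "lot-1", "qty": 100})
state.apply("vaccine-inventory", "lot-1", {"lot_id": "lot-1", "qty": 80})
state.apply("reefers", "r-7", {"reefer_id": "r-7", "status": "available"})
print(state.snapshot("vaccine-inventory"))
```

Keeping only the latest value per key means the optimizer always works on a consistent picture of inventory, reefers, and transportation, no matter how many events arrived since the previous run.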

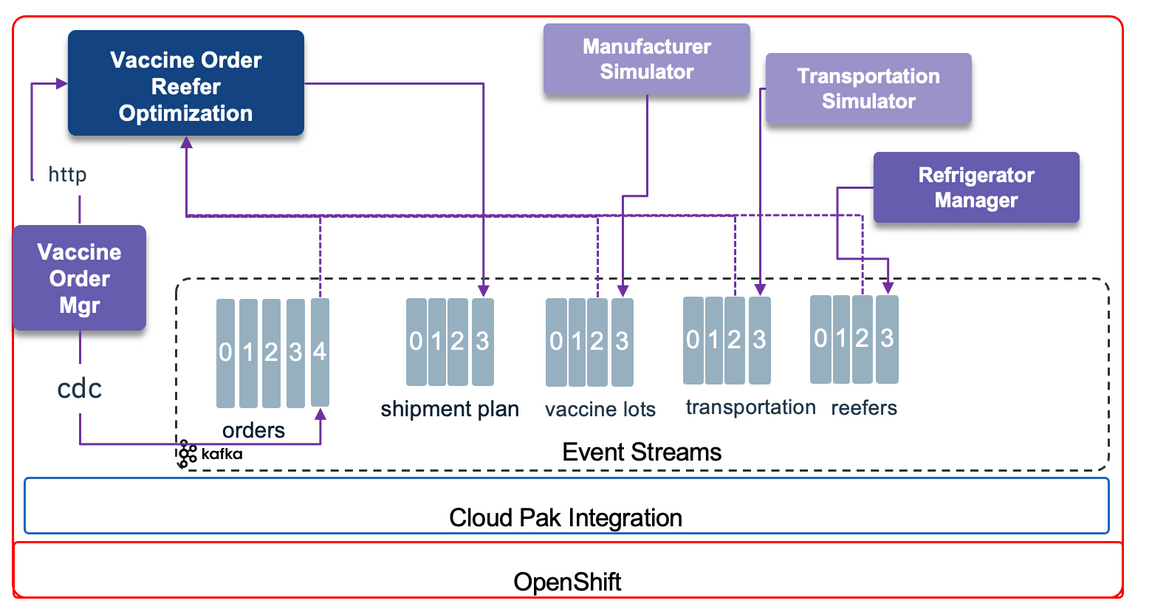

The application is deployed on OpenShift and participates with other components to demonstrate the vaccine order processing, as illustrated below:

- An external app that manages the order life cycle; it is available in the Vaccine Order manager git repository.

- This Vaccine Order Reefer Optimization component.

- A manufacturer simulator that sends vaccine lot inventory to the vaccine-inventory Kafka topic.

- A refrigerator manager that manages the availability of refrigerated containers to carry the vaccine lots.

- A transportation simulator that sends itinerary updates.

Build

Build the Docker image and push it to your registry, for example:

docker build -t ibmcase/vaccine-order-optimizer .

docker push ibmcase/vaccine-order-optimizer

The repository includes a GitHub Actions workflow that builds the image and pushes it automatically to the public Docker registry.

The workflow uses the following secrets defined in the git repository:

- DOCKER_IMAGE_NAME = vaccine-order-optimizer

- DOCKER_REPOSITORY = ibmcase

- DOCKER_USERNAME and DOCKER_PASSWORD

Run locally

You can run the code on your laptop or on a server while connected to Event Streams deployed on OpenShift. A few prerequisites apply:

- Get the Kafka broker URL, the schema registry URL, the user name and password, and the pem file containing the server certificate.

- Place the certificate under the certs folder.

- Copy script/setenv-tmpl.sh to script/setenv.sh.

- Modify the environment variables in script/setenv.sh.

source ./script/setenv.sh

docker run -ti -e KAFKA_BROKERS=$KAFKA_BROKERS -e SCHEMA_REGISTRY_URL=$SCHEMA_REGISTRY_URL -e REEFER_TOPIC=$REEFER_TOPIC -e INVENTORY_TOPIC=$INVENTORY_TOPIC -e TRANSPORTATION_TOPIC=$TRANSPORTATION_TOPIC -e KAFKA_USER=$KAFKA_USER -e KAFKA_PASSWORD=$KAFKA_PASSWORD -e KAFKA_CERT=$KAFKA_CERT -p 5000:5000 ibmcase/vaccine-order-optimizer
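Inside the container, the Python code has to turn these environment variables into a Kafka client configuration. The sketch below shows one way this could look; it is not the repository's actual code. The function name `kafka_config_from_env` is hypothetical, the dictionary keys follow the librdkafka property names used by the confluent-kafka client, and the SASL mechanism `SCRAM-SHA-512` is an assumption that depends on how your Event Streams user was created.

```python
import os

REQUIRED = ("KAFKA_BROKERS",)


def kafka_config_from_env(env=None):
    """Build a librdkafka-style config dict from environment variables."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise ValueError("missing environment variables: " + ", ".join(missing))

    config = {"bootstrap.servers": env["KAFKA_BROKERS"]}

    user, password = env.get("KAFKA_USER"), env.get("KAFKA_PASSWORD")
    if user and password:
        config.update({
            "security.protocol": "SASL_SSL",
            "sasl.mechanisms": "SCRAM-SHA-512",  # assumption, adjust to your cluster
            "sasl.username": user,
            "sasl.password": password,
        })

    # Path to the pem file holding the server certificate, if one is provided.
    if env.get("KAFKA_CERT"):
        config["ssl.ca.location"] = env["KAFKA_CERT"]
    return config


# Example values only; none of them point at a real cluster.
example = kafka_config_from_env({
    "KAFKA_BROKERS": "broker-0:9093",
    "KAFKA_USER": "app-user",
    "KAFKA_PASSWORD": "not-a-real-password",
    "KAFKA_CERT": "/certs/es-cert.pem",
})
print(example["bootstrap.servers"])
```

Failing fast on a missing broker URL gives a clearer error at startup than a connection timeout later on.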

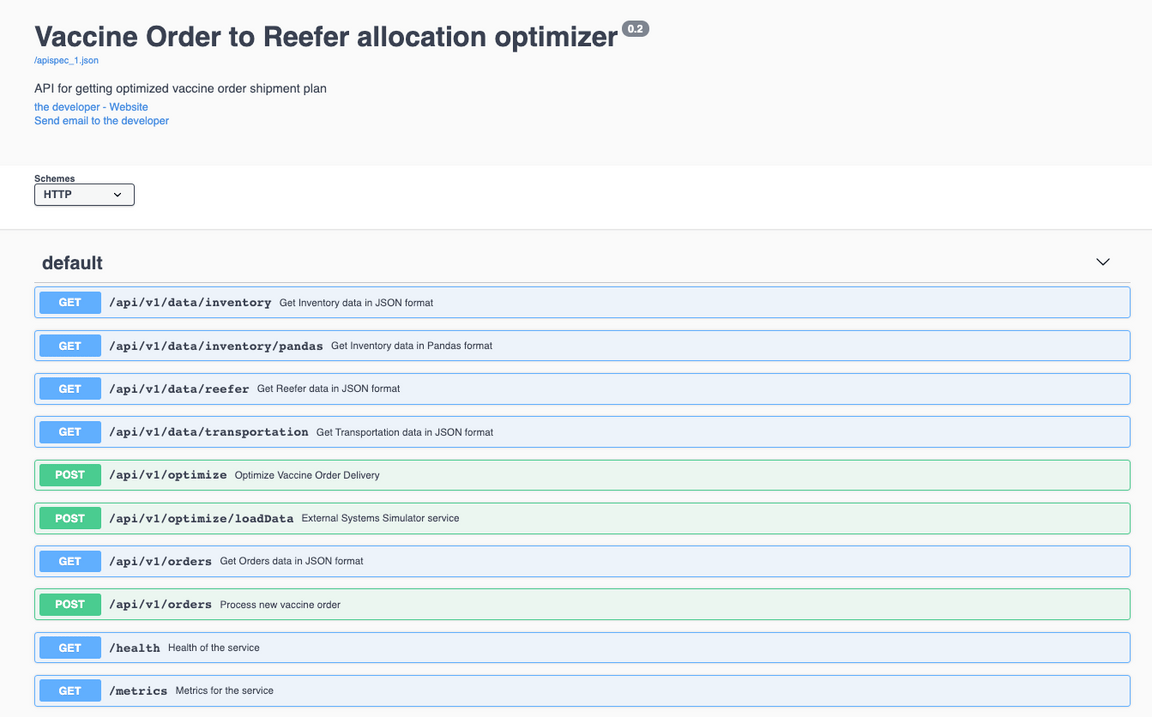

The swagger looks like:

Deploy to OpenShift

Connect to the vaccine project using:

oc project vaccine

Modify kubernetes/configmap.yaml with the Kafka broker URL you are using, and with the topic names if you changed them. Then run:

oc apply -f kubernetes/configmap.yaml

Copy the pem certificate secret from the eventstreams project (or the project hosting the Kafka cluster) to the local vaccine project with a command like:

oc get secret light-es-cluster-cert-pem -n eventstreams --export -o yaml | oc apply -f -

This pem file is mounted to the pod via the secret as:

volumeMounts:
- mountPath: /certs
  name: eventstreams-cert-pem
volumes:
- name: eventstreams-cert-pem
  secret:
    secretName: light-es-cluster-cert-pem

The path the Python code uses to access this pem file is defined in the environment variable:

- name: KAFKA_CERT
  value: /certs/es-cert.pem

The name of the file is equal to the name of the {.data.es-cert.pem} field in the secret.

Name: eventstreams-cert-pem
Namespace: vaccine
Labels: <none>
Annotations: <none>
Type: Opaque
Data
====

Copy the Kafka user’s secret from the eventstreams project (or the project hosting Kafka) to the current vaccine project. This secret has two data fields, username and password:

oc get secret app-user-tls -n eventstreams --export -o yaml | oc apply -f -

They are used in the Deployment configuration as:

- name: KAFKA_USER
  valueFrom:
    secretKeyRef:
      key: username
      name: app-user-tls
- name: KAFKA_PASSWORD
  valueFrom:
    secretKeyRef:
      key: password
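To check that the copied secret really carries the username and password fields, keep in mind that Kubernetes stores Secret data base64-encoded. The following is a minimal sketch, assuming the output of `oc get secret app-user-tls -o json` has been saved into a string; the function name `decode_secret_data` and the sample values are hypothetical.

```python
import base64
import json


def decode_secret_data(secret_json):
    """Decode the base64 values under the `data` key of a Secret manifest."""
    secret = json.loads(secret_json)
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret.get("data", {}).items()
    }


# Stand-in for the JSON returned by the cluster; the values are made up.
sample = json.dumps({
    "kind": "Secret",
    "metadata": {"name": "app-user-tls"},
    "data": {
        "username": base64.b64encode(b"app-user").decode("ascii"),
        "password": base64.b64encode(b"not-a-real-password").decode("ascii"),
    },
})
print(sorted(decode_secret_data(sample)))
```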

Deploy the application using:

oc apply -f kubernetes/app-deployment.yaml

Usage Details

This section explains how to validate that the app is running.

Once the application is deployed on OpenShift, get the route to the externally exposed URL:

oc describe routes vaccine-order-optimizer

Access the Swagger UI. An example URL: http://vaccine-order-optimizer-vaccine…cloud/apidocs/

Trigger the data loading with the operation:

curl -X POST http://vaccine-order-optimizer-vaccine......cloud/api/v1/optimize/loadData

Send a new order:

curl -X POST -H "Content-Type: application/json" http://vaccine-order-optimizer-vaccine......cloud/ --data "@./data/order1.json"
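The same two calls can be scripted. The sketch below only builds the requests with Python's standard library, so it can be inspected without a running cluster. `base_url` must be replaced with your own route, and `order_path` is a placeholder because the order endpoint path is not spelled out above. The helper names and the sample order payload are hypothetical.

```python
import json
import urllib.request


def load_data_request(base_url):
    """Build a POST with an empty body for the data-loading operation."""
    url = base_url.rstrip("/") + "/api/v1/optimize/loadData"
    return urllib.request.Request(url, data=b"", method="POST")


def new_order_request(base_url, order_path, order):
    """Build a POST that sends an order as JSON.

    order_path is whatever path your Swagger page lists for new orders.
    """
    url = base_url.rstrip("/") + "/" + order_path.lstrip("/")
    body = json.dumps(order).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder host, path, and payload: substitute your own values.
req = new_order_request(
    "http://localhost:5000", "/orders-path-placeholder", {"order_id": "demo"}
)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```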