Required Services

Development Tools

You will require the following tools locally on your development system:

Clone all the repositories

Start by cloning the root repository using the command:

git clone https://github.com/ibm-cloud-architecture/refarch-kc/

Then go to the refarch-kc folder and use the command:

./script/clone.sh

to get all the solution repositories. You should have at least the following repositories:

- refarch-kc-container-ms

- refarch-kc-order-ms

- refarch-kc-ui

- refarch-kc

- refarch-kc-ms

- refarch-kc-streams

Then modify the environment variables according to the environment you are using. This file is used by many scripts in the solution to set the target deployment environment: LOCAL, IBMCLOUD, ICP, MINIKUBE.
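
Because many scripts depend on this setting, it can help to fail early on an unsupported value. The following is a minimal sketch and is not part of the solution's scripts: the function name `validate_target_env` and the variable name `TARGET_ENV` are hypothetical, and only the four environment names come from the list above.

```shell
#!/bin/sh
# Hypothetical helper (not part of the solution scripts): accept only the
# target deployment environments named above.
validate_target_env() {
  case "$1" in
    LOCAL|IBMCLOUD|ICP|MINIKUBE)
      return 0
      ;;
    *)
      echo "Unsupported target environment: '$1'" >&2
      return 1
      ;;
  esac
}

# Example (TARGET_ENV is an assumed variable name):
#   validate_target_env "$TARGET_ENV" || exit 1
```

A check like this could run at the top of a deployment script, before any cluster command is issued.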

Apache Kafka

There are multiple options to deploy an Apache Kafka-based cluster to support this reference implementation:

- IBM Event Streams on IBM Cloud

- IBM Event Streams on RedHat OpenShift Container Platform

- Apache Kafka via Strimzi Operator

IBM Event Streams on IBM Cloud

Service Deployment

We recommend following our most recent lab on how to provision an Event Streams instance on IBM Cloud.

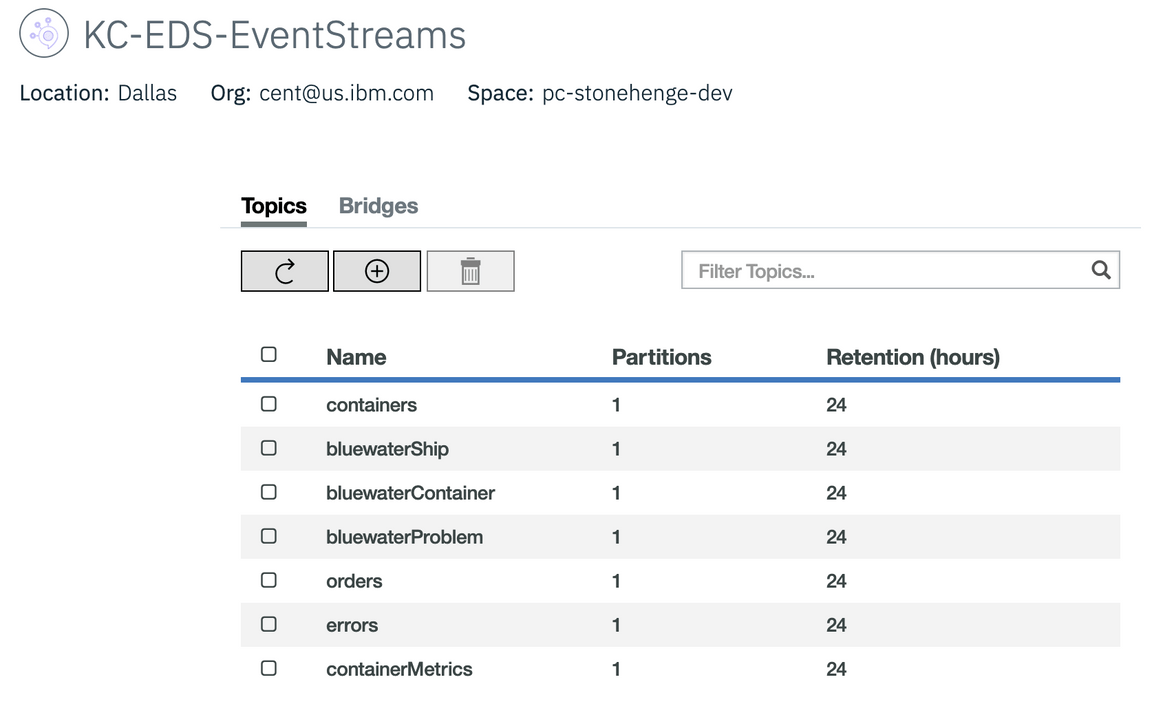

In the Manage panel add the topics needed for the solution. We need at least the following:

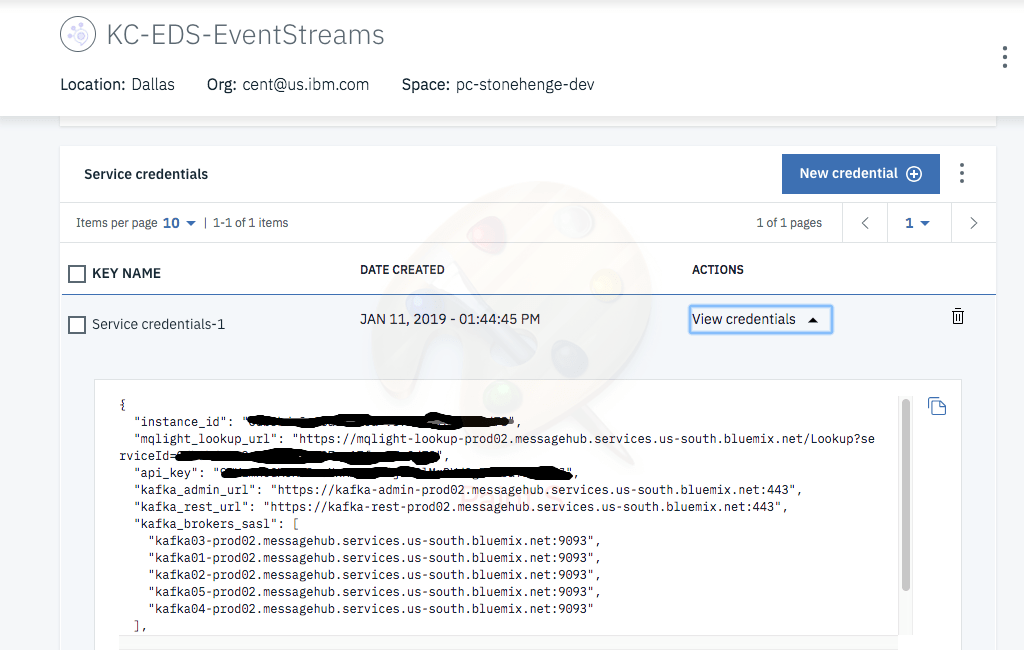

In the Service Credentials tab, create new credentials to get the Kafka broker list, the admin URL and the api_key needed to authenticate the consumers or producers.

Kafka Brokers

Regardless of specific deployment targets (OCP, IKS, k8s), the following prerequisite Kubernetes ConfigMap needs to be created to support the deployments of the application’s microservices. These artifacts need to be created once per unique deployment of the entire application and can be shared between application components in the same overall application deployment. These values can be acquired from the kafka_brokers_sasl section of the service instance’s Service Credentials.

kubectl create configmap kafka-brokers --from-literal=brokers='<replace with comma-separated list of brokers>' -n <target k8s namespace / ocp project>
kubectl describe configmap kafka-brokers -n <target k8s namespace / ocp project>
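
The Service Credentials list the brokers as separate entries, while the ConfigMap expects a single comma-separated value. As a minimal sketch, the hypothetical helper `join_brokers` below (not part of the solution) joins broker addresses passed as arguments; the host names in the example are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: join broker addresses given as separate arguments
# into the comma-separated form expected by the brokers literal.
join_brokers() {
  result=""
  for broker in "$@"; do
    if [ -z "$result" ]; then
      result="$broker"
    else
      result="$result,$broker"
    fi
  done
  printf '%s\n' "$result"
}

# Example (placeholder host names, not real brokers):
#   BROKERS="$(join_brokers broker-0.example.com:9093 broker-1.example.com:9093)"
#   kubectl create configmap kafka-brokers --from-literal=brokers="$BROKERS" -n <target k8s namespace / ocp project>
```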

Event Streams User Credentials

The Event Streams user credentials are needed for any deployed consumers or producers to work with the IBM Event Streams service in IBM Cloud. To avoid sharing security keys, create a Kubernetes Secret in the target cluster to which you will deploy the application microservices. The API key is available from the Service Credentials you created above.

kubectl create secret generic eventstreams-cred --from-literal=username='token' --from-literal=password='<replace with api key>' -n <target k8s namespace / ocp project>
kubectl describe secret eventstreams-cred -n <target k8s namespace / ocp project>

IBM Event Streams on RedHat OpenShift Container Platform

Service Deployment

The installation is documented in the product documentation and in our own note here.

Kafka Brokers

Regardless of specific deployment targets (OCP, IKS, k8s), the following prerequisite Kubernetes ConfigMap needs to be created to support the deployments of the application’s microservices. These artifacts need to be created once per unique deployment of the entire application and can be shared between application components in the same overall application deployment.

kubectl create configmap kafka-brokers --from-literal=brokers='<replace with comma-separated list of brokers>' -n <target k8s namespace / ocp project>
kubectl describe configmap kafka-brokers -n <target k8s namespace / ocp project>

Event Streams User Credentials

The Event Streams SCRAM user credentials are needed for any deployed consumers or producers to work with the IBM Event Streams instance running in your cluster. These SCRAM credentials are associated with the KafkaUser object that is created behind the scenes. To create that KafkaUser object and obtain its SCRAM credentials, follow the instructions at https://ibm.github.io/event-streams/security/managing-access/#creating-a-kafkauser-in-the-ibm-event-streams-ui

To avoid sharing security keys, create a Kubernetes Secret in the target cluster you will deploy the application microservices to:

kubectl create secret generic eventstreams-cred --from-literal=username='<replace with scram username>' --from-literal=password='<replace with scram password>' -n <target k8s namespace / ocp project>
kubectl describe secrets -n <target k8s namespace / ocp project>

IMPORTANT: Our reference application uses idempotent producers, for which the KafkaUser needs a special set of permissions. To add these, edit the KafkaUser created when you generated the SCRAM credentials (following the link above in this section) and add the following set of permissions to the acls list:

- host: '*'
  operation: IdempotentWrite
  resource:
    name: '*'
    patternType: literal
    type: cluster

Event Streams Certificates

If you are using Event Streams as your Kafka broker provider and it is deployed via the IBM Cloud Pak for Integration (ICP4I), you will need to create an additional Secret to store the generated Certificates & Truststores to connect securely between your application components and the Kafka brokers. These artifacts need to be created once per unique deployment of the entire application and can be shared between application components in the same overall application deployment.

From the Connect to this cluster tab on the landing page of your Event Streams installation, download both the PKCS12 and the PEM certificates. After you have downloaded these, we are going to make them available to our producer and consumers by storing them in Kubernetes Secrets:

oc create secret generic eventstreams-truststore --from-file=<path to downloaded es-cert.p12>
oc create secret generic eventstreams-cert-pem --from-file=<path to downloaded es-cert.pem>

Apache Kafka via Strimzi Operator

If you simply want to deploy Kafka using the open source, community-supported Helm Charts, you can do so with the following commands.

Postgresql

The Container Manager microservice persists the Reefer Container inventory in a Postgresql database. The deployment of Postgresql is only necessary to support the deployment of the Container Manager microservice. If you are not deploying the Container Manager microservice, you do not need to deploy and configure a Postgresql service and database.

The options to support the Container Manager microservice with a Postgresql database are:

Postgresql on IBM Cloud

Service Deployment

To install the service, follow the product documentation here.

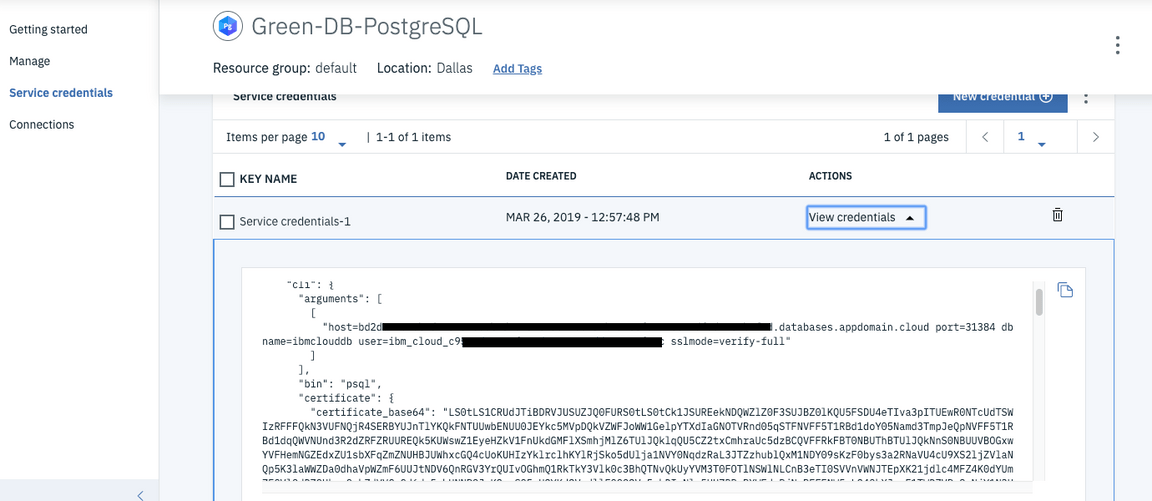

Once the service is deployed, you need to create some service credentials and retrieve the following values for the different configurations:

- postgres.username

- postgres.password

- postgres.composed, which will need to be mapped to a JDBC URL in the format of jdbc:postgresql://<hostname>:<port>/<database-name>?sslmode=verify-full&sslfactory=org.postgresql.ssl.NonValidatingFactory (this will remove the username and password values from the default composed string)
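
The mapping from the composed string to the JDBC URL can be sketched in plain shell. This is only an illustration under an assumption: the composed string is taken to have the form postgres://<user>:<password>@<hostname>:<port>/<database-name>?<options>, which may differ from what your service credentials show. The function name `composed_to_jdbc` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: convert a composed connection string of the assumed form
#   postgres://<user>:<password>@<hostname>:<port>/<database-name>?<options>
# into the JDBC URL format described above.
composed_to_jdbc() {
  composed="$1"
  # Drop the scheme, then the "user:password@" credentials part.
  rest="${composed#*://}"
  rest="${rest#*@}"
  # Drop any existing query string; the JDBC parameters are appended below.
  rest="${rest%%\?*}"
  printf 'jdbc:postgresql://%s?sslmode=verify-full&sslfactory=org.postgresql.ssl.NonValidatingFactory\n' "$rest"
}

# Example (placeholder values):
#   composed_to_jdbc 'postgres://admin:secret@host.example.com:31234/ibmclouddb?sslmode=verify-full'
```

The username and password are stripped here on purpose, since they are stored in their own secrets.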

Creating Postgresql credentials as Kubernetes Secrets

- Applying the same approach as above, copy the Postgresql URL as defined in the Postgresql service credential and execute the following command:

kubectl create secret generic postgresql-url --from-literal=binding='<replace with postgresql-url>' -n <target k8s namespace / ocp project>

- For the user:

kubectl create secret generic postgresql-user --from-literal=binding='ibm_cloud_c...' -n <target k8s namespace / ocp project>

- For the user password:

kubectl create secret generic postgresql-pwd --from-literal=binding='<password from the service credential>' -n <target k8s namespace / ocp project>

- When running Postgresql through the IBM Cloud service, additional SSL certificates are required to communicate securely:

- Install the IBM Cloud Database CLI Plugin:

ibmcloud plugin install cloud-databases

- Get the certificate using the name of the postgresql service:

ibmcloud cdb deployment-cacert $IC_POSTGRES_SERV > postgresql.crt

- Then add it into an environment variable:

export POSTGRESQL_CA_PEM="$(cat ./postgresql.crt)"

- Then define a secret:

kubectl create secret generic postgresql-ca-pem --from-literal=binding="$POSTGRESQL_CA_PEM" -n browncompute
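
Because the certificate is captured with a shell redirect, postgresql.crt will contain whatever the command printed, which could be an error message rather than a certificate. A minimal sketch of a sanity check before creating the secret follows; the function name `looks_like_pem` is hypothetical, and the check only looks for a PEM header, it does not validate the certificate.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the file is non-empty and contains
# a PEM certificate header. This is not a cryptographic validation.
looks_like_pem() {
  [ -s "$1" ] && grep -q -e '-----BEGIN CERTIFICATE-----' "$1"
}

# Example:
#   looks_like_pem ./postgresql.crt || { echo "postgresql.crt is not a PEM certificate" >&2; exit 1; }
```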

Community-based Postgresql Helm charts

If you simply want to deploy Postgresql using the open source, community-supported Helm Charts, you can do so with the following commands.

Environment Considerations

See the Reference Application Components Pre-reqs for details on creating the necessary ServiceAccount with the required permissions prior to deployment.

Service Deployment

- Add Bitnami Helm Repository:

helm repo add bitnami https://charts.bitnami.com/bitnami

- Update the Helm repository:

helm repo update

- Create a Kubernetes Namespace or OpenShift Project (if not already created).

kubectl create namespace <target namespace>

- Deploy Postgresql using the bitnami/postgresql Helm Chart:

mkdir bitnami
mkdir templates
helm fetch --untar --untardir bitnami bitnami/postgresql
helm template --name postgre-db --set postgresqlPassword=supersecret \
  --set persistence.enabled=false --set serviceAccount.enabled=true \
  --set serviceAccount.name=<existing service account> bitnami/postgresql \
  --namespace <target namespace> --output-dir templates
kubectl apply -f templates/postgresql/templates

It will take a few minutes to get the pods ready.

Creating Postgresql credentials as Kubernetes Secrets

- The postgresql-url needs to point to the in-cluster (non-headless) Kubernetes Service created as part of the deployment and should take the form of the deployment name with the suffix of -postgresql:

kubectl get services | grep postgresql | grep -v headless
kubectl create secret generic postgresql-url --from-literal=binding='jdbc:postgresql://<helm-release-name>-postgresql' -n <target k8s namespace / ocp project>

- For the user:

kubectl create secret generic postgresql-user --from-literal=binding='postgres' -n <target k8s namespace / ocp project>

- For the user password:

kubectl create secret generic postgresql-pwd --from-literal=binding='<password used in the helm template command>' -n <target k8s namespace / ocp project>

Service Debugging & Troubleshooting

You can retrieve the in-container password with the following command. This should be the same value you passed in when you deployed the service.

export POSTGRES_PASSWORD=$(kubectl get secret --namespace <target namespace> postgre-db-postgresql -o jsonpath="{.data.postgresql-password}" | base64 --decode)

And then use the psql command line interface to interact with postgresql. For that, we use a Docker image as a client to the Postgresql server:

kubectl run postgre-db-postgresql-client --rm --tty -i --restart='Never' --namespace <target namespace> --image bitnami/postgresql:11.3.0-debian-9-r38 --env="PGPASSWORD=$POSTGRES_PASSWORD" --command -- psql --host postgre-db-postgresql -U postgres -p 5432

To connect to your database from outside the cluster, execute the following commands. The port-forward runs in the background so that psql can connect through it:

kubectl port-forward --namespace <target namespace> svc/postgre-db-postgresql 5432:5432 &
PGPASSWORD="$POSTGRES_PASSWORD" psql --host 127.0.0.1 -U postgres -p 5432

BPM

The containers microservice component of this Reefer Container EDA reference application can be integrated with a BPM process for the maintenance of the containers. This BPM process dispatches a field engineer to the reefer container to fix it. Scheduling an engineer and then completing the work is best facilitated through a process-based, structured workflow. We will use IBM BPM on Cloud or Cloud Pak for Automation to demonstrate the workflow. This workflow can be explored in detail here.

In order for the containers microservice to fire the BPM workflow, we need to provide the following information through Kubernetes configMaps and secrets:

Provide in a configMap:

- the BPM authentication login endpoint

- the BPM workflow endpoint

- the BPM anomaly event threshold

- the BPM authentication token time expiration

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: bpm-anomaly
data:
  url: <replace with your BPM workflow endpoint>
  login: <replace with your BPM authentication endpoint>
  expiration: <replace with the number of seconds for the auth token to expire after>
EOF

Provide your BPM instance’s credentials in a secret:

kubectl create secret generic bpm-anomaly --from-literal=user='<replace with your BPM user>' --from-literal=password='<replace with your BPM password>' -n <target k8s namespace / ocp project>
kubectl describe secrets -n <target k8s namespace / ocp project>

IMPORTANT: The name used for both the secret and the configMap (bpm-anomaly) is the default that the container microservice uses in its Helm chart. Make sure the names of the configMap and secret you create match the names configured in the containers microservice’s Helm chart.

If you do not have access to a BPM instance with this field engineer dispatching workflow, you can bypass the call to BPM by disabling it in the container microservice component. To do so, use the following API endpoints of the container microservice:

- Enable BPM:

http://<container_microservice_endpoint>/bpm/enable

- Disable BPM:

http://<container_microservice_endpoint>/bpm/disable

- BPM status:

http://<container_microservice_endpoint>/bpm/status

where <container_microservice_endpoint> is the route, ingress or nodeport service you associated to your container microservice component at deployment time.
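
As a minimal sketch, the three endpoints above could be wrapped in a small helper that builds the URL and rejects any other action. The function name `bpm_url` is hypothetical, the host in the example is a placeholder, and the HTTP method is an assumption (GET), since it is not stated here.

```shell
#!/bin/sh
# Hypothetical helper: build the URL for one of the three BPM endpoints
# listed above. Any other action is rejected.
bpm_url() {
  endpoint="$1"
  action="$2"
  case "$action" in
    enable|disable|status)
      printf 'http://%s/bpm/%s\n' "$endpoint" "$action"
      ;;
    *)
      echo "Unknown BPM action: '$action'" >&2
      return 1
      ;;
  esac
}

# Example (placeholder host; HTTP method assumed to be GET):
#   curl "$(bpm_url containers.example.com disable)"
```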

IBM BPM on IBM Cloud

To be completed

Reference: https://www.bpm.ibmcloud.com/

IBM BPM on RedHat OpenShift Container Platform

To be completed