Installing BACA

- Prepare your environment by following the instructions in IBM Cloud Pak for Automation 19.0.1 on Certified Kubernetes. The instructions ask you to validate that the right versions of Kubernetes, Helm, the Kubernetes CLI, and the OpenShift Container Platform CLI are installed on the machine that you use for the installation. The containers also require access to one or more databases and, if you use LDAP, to an LDAP server. Ensure that the database and LDAP versions are supported by the IBM Cloud Pak for Automation version that you are installing.

- Ensure that you have downloaded the PPA package for the version of IBM Business Automation Content Analyzer that you plan to install. We used `ICP4A19.0.1-baca.tgz` to install IBM Business Automation Content Analyzer version 19.0.1.

- Download the `loadimages.sh` script from GitHub.

- Create an OpenShift project (namespace) in which to install IBM Business Automation Content Analyzer version 19.0.1, and make the new project the current project. To create a new project, use the command `oc new-project <projectname>`, where `<projectname>` is the name of the project, for example `baca`.

- Log in to the OpenShift Docker registry by following the instructions in Load the Cloud Pak images.
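The project-creation step above can be sketched as a small dry-run helper. This is a minimal sketch, not part of the product scripts: the function names are hypothetical, and the name check assumes the usual Kubernetes rule that a namespace is a DNS-1123 label (lowercase letters, digits, and `-`, at most 63 characters). Nothing is run against a cluster; the commands are only printed.

```shell
# Sketch: validate a project name, then print the oc commands that create
# the project and select it. Function names are hypothetical.

# Succeeds if the name looks like a DNS-1123 label (assumed namespace rule).
is_valid_project_name() {
  [ "${#1}" -le 63 ] || return 1
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
}

# Prints the commands for the given project name without executing them.
print_project_commands() {
  if ! is_valid_project_name "$1"; then
    echo "invalid project name: $1" >&2
    return 1
  fi
  echo "oc new-project $1"
  # oc new-project already switches to the new project; oc project is only
  # needed if you changed projects afterwards.
  echo "oc project $1"
}
```

For example, `print_project_commands baca` prints the two commands for a project named `baca`, while a name such as `Baca_1` is rejected before any command is shown.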

-

Run the

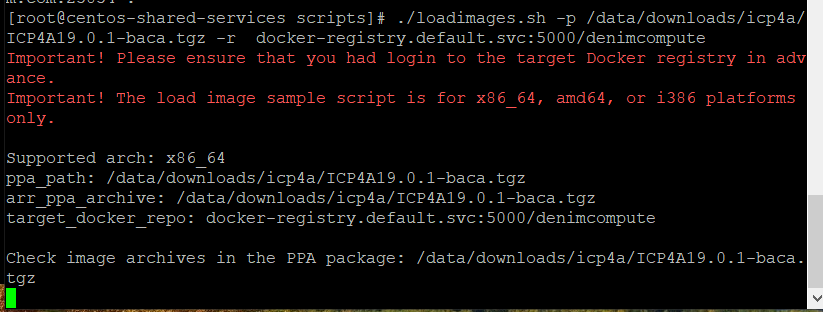

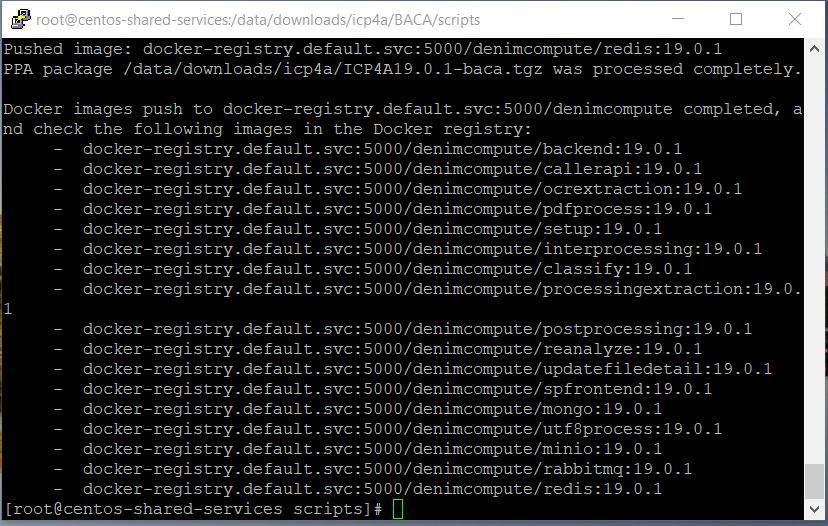

loadimages.shscript to tag and push the product container images into your Docker registry. Specify the two mandatory parameters in the command line thatloadimages.shscript requires. The namespace is the<projectname>that you specified earlier.

In the screen shot below, target docker registry is docker-registry.default.svc:5000 and namespace is denimcompute.

- IBM BACA 19.0.1 consists of 17 images, and on successful completion the `loadimages.sh` script lists all the images that it uploaded to the Docker registry.

- Prepare the environment for IBM Business Automation Content Analyzer. See Preparing to install automation containers on Kubernetes. These procedures include setting up the databases, LDAP, storage, and configuration files that are required for use and operation.

- If you are using LDAP for authentication, the BACA users need to be configured on the LDAP server. An initial user is created in IBM Business Automation Content Analyzer when the Db2 database is first created. When LDAP is used, this user name must match the LDAP user name. If LDAP is not used for authentication, users do not need to be set up in advance. Refer to Preparing users for IBM Business Automation Content Analyzer for more information.

- If you do not have a Db2 database, set up a new Db2 environment. Download the database scripts from this GitHub repo. Refer to Creating and Configuring a DB2 database for more information.

-

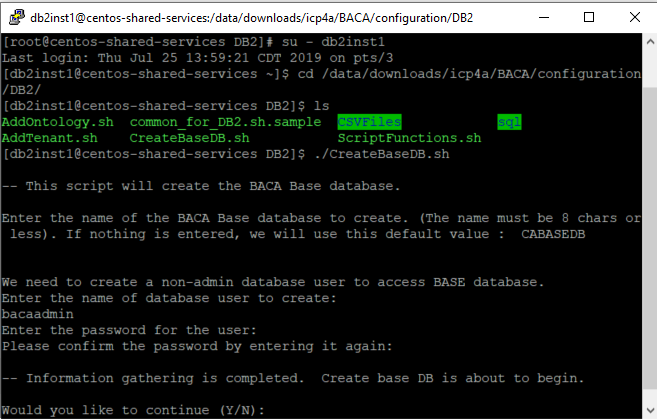

Log into DB2 instance using DB2 instance administrator id such as db2inst1 (refer the section Setup DB2.

-

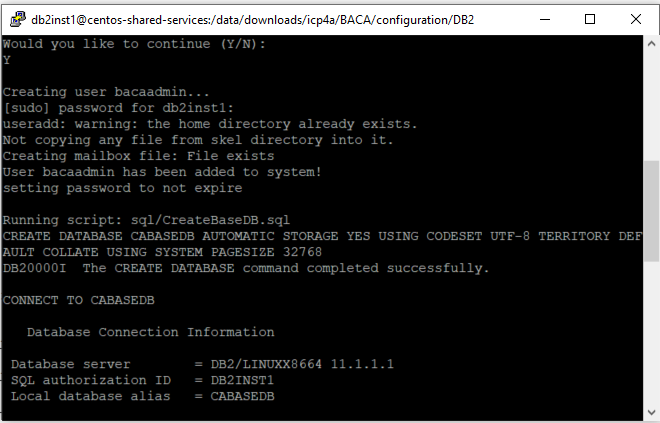

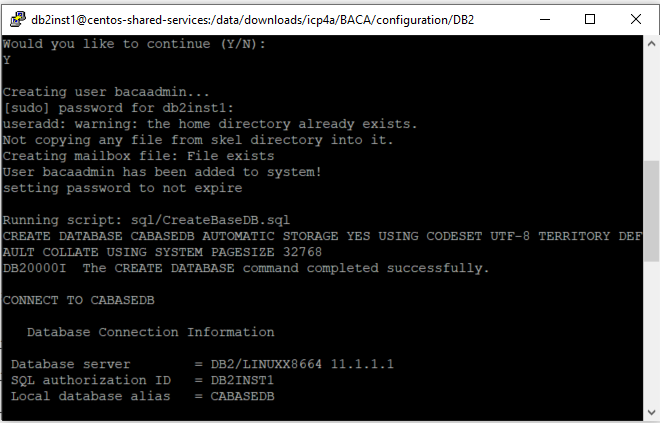

Set up the base database by running the following command:

./CreateBaseDB.sh.

Enter the following details:

- Name of the IBM Business Automation Content Analyzer Base database - (enter a unique name of 8 characters or less and no special characters).

- Name of database user - (enter a database user name) - can be a new or existing Db2 user.

- Password for the user - (enter a password) - each time you are prompted. If the user already exists, this prompt is skipped.

Enter Y when you are asked "Would you like to continue (Y/N):"
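Because `CreateBaseDB.sh` prompts interactively, it can help to check the base database name before you start. The following is a minimal sketch under one assumption: "no special characters" is read here as "letters and digits only". The function name is hypothetical and is not part of the database scripts.

```shell
# Sketch: check a candidate base database name against the rule stated
# above (8 characters or fewer, no special characters).
# Assumption: "no special characters" means ASCII letters and digits only.
is_valid_base_db_name() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[A-Za-z0-9]{1,8}$'
}
```

A name such as `BACADB` passes, while `BACA_BASE_DB` fails on both length and the underscore.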

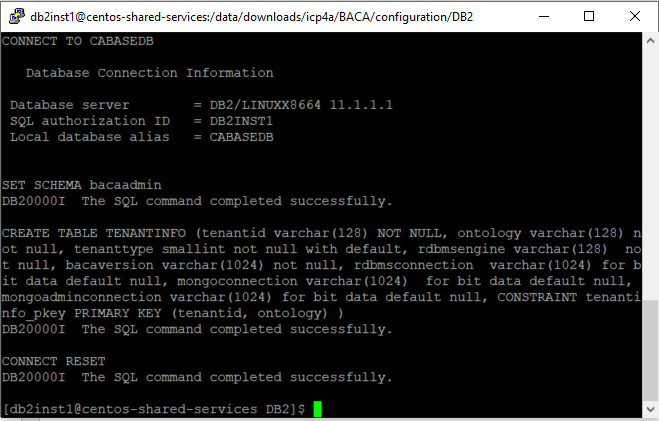

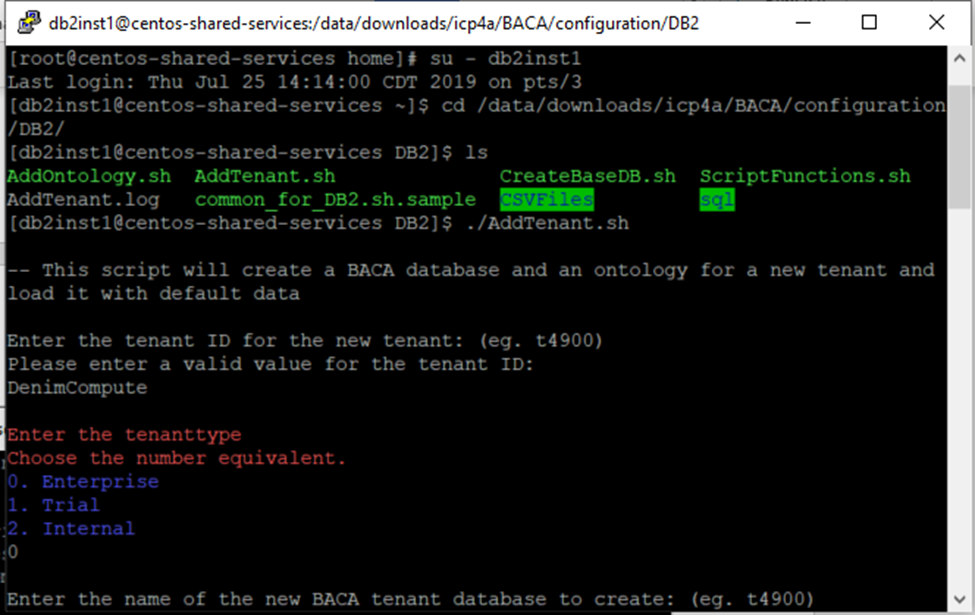

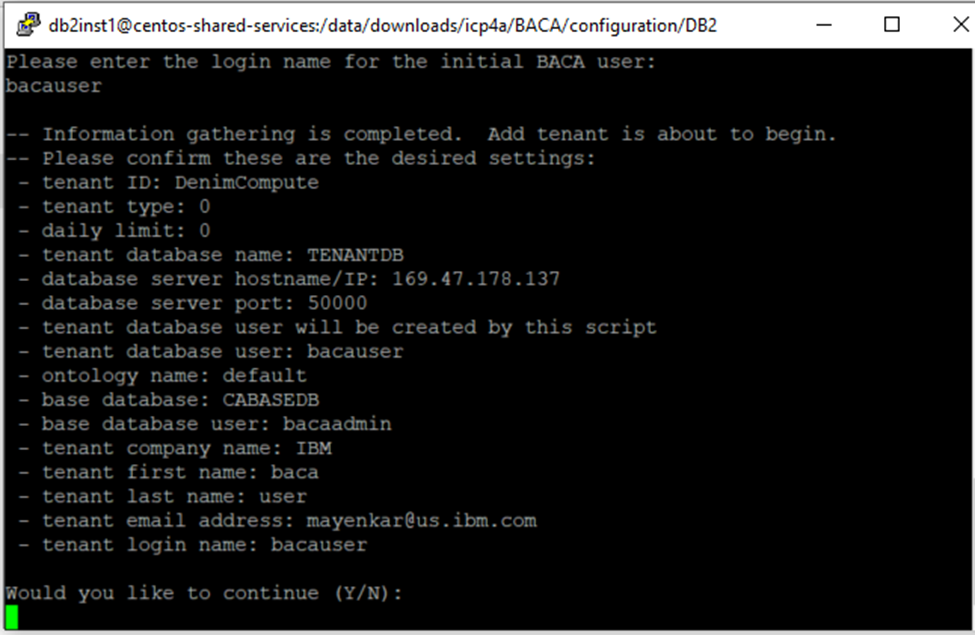

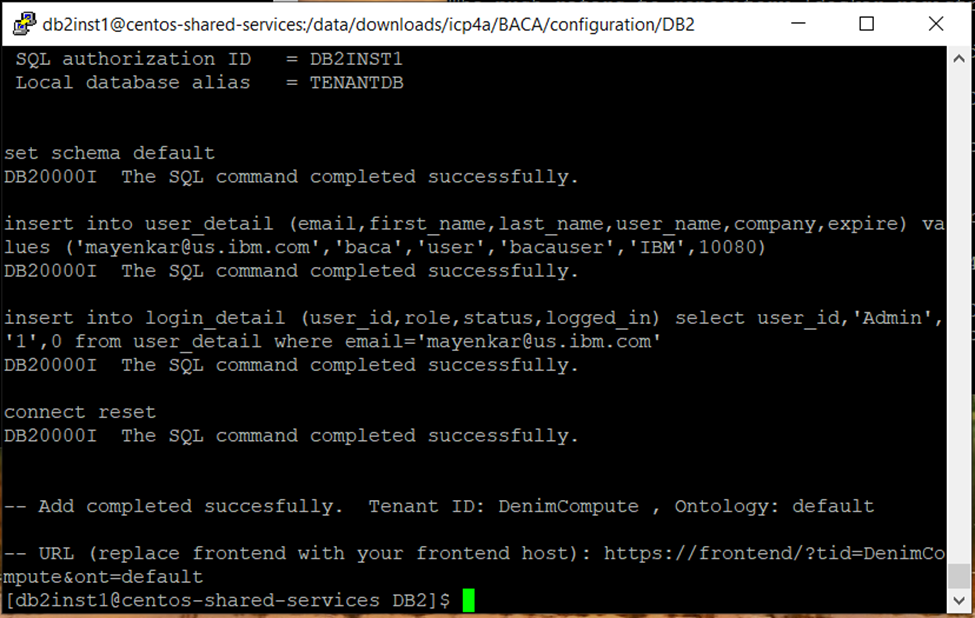

- Create the tenant database by following these instructions. Once the tenant database is created and populated with initial content, the tenant ID and ontology are displayed (see the screenshots below).

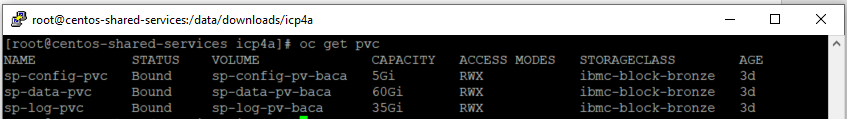

- External storage is needed in the content services environment. Set up and configure storage to prepare for the container configuration and deployment. Follow the instructions at Configuring storage for the Business Automation Content Analyzer environment to create persistent volumes (PVs) and persistent volume claims (PVCs). The screen below shows the PV and PVC created in the project `<projectname>` (for example `baca`) that you created earlier.

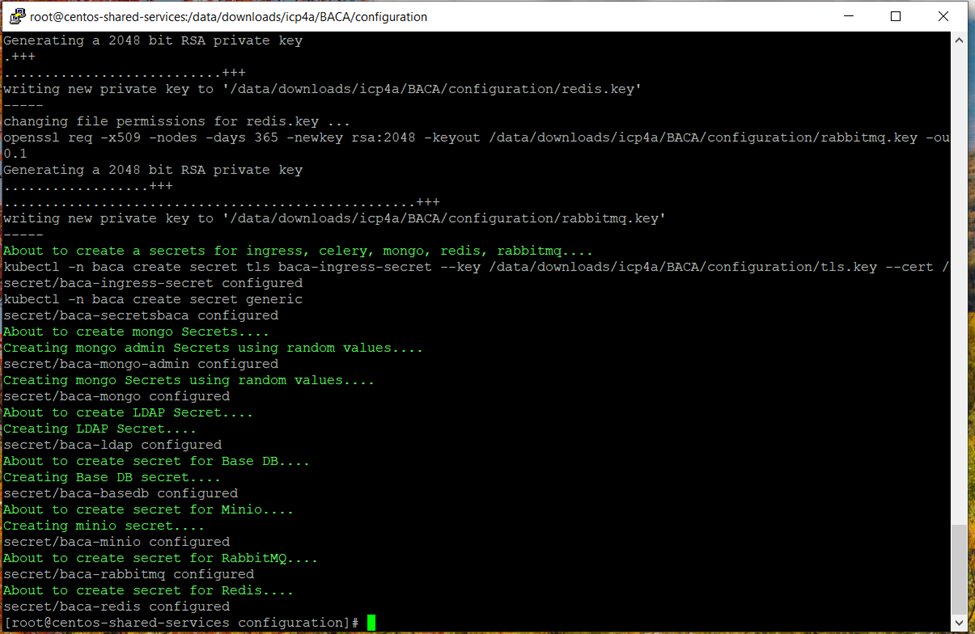

- You need to create SSL certificates and secrets before you install via Helm chart. You can run the

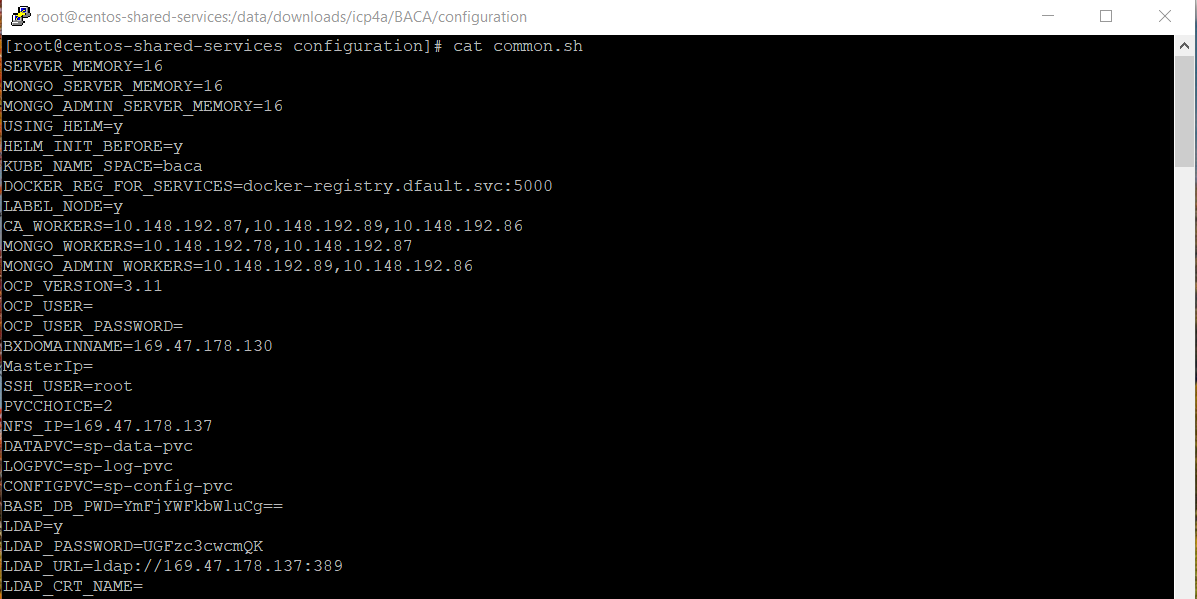

init_deployment.shscript provided here to create SSL certificates and secrets. Theinit_deployment.shscripts requires you to populate parameters in common.sh file. Use common_OCP_template.sh as basis forcommon.shto be used for installation on OpenShift. Information on populating the parameters incommon.shcan be found at Prerequisite install parameters. The screen shot below capturescommon.shthat we used in our installation process. Please note that we selectedPVCCHOICEas2as we created, persistent volumes and persistent volume claims manually in the earlier step. Furthermore, please setHELM_INIT_BEFOREasYas Helm has been installed earlier and we do not want to install Helm as part of runninginit_deployment.shscript.

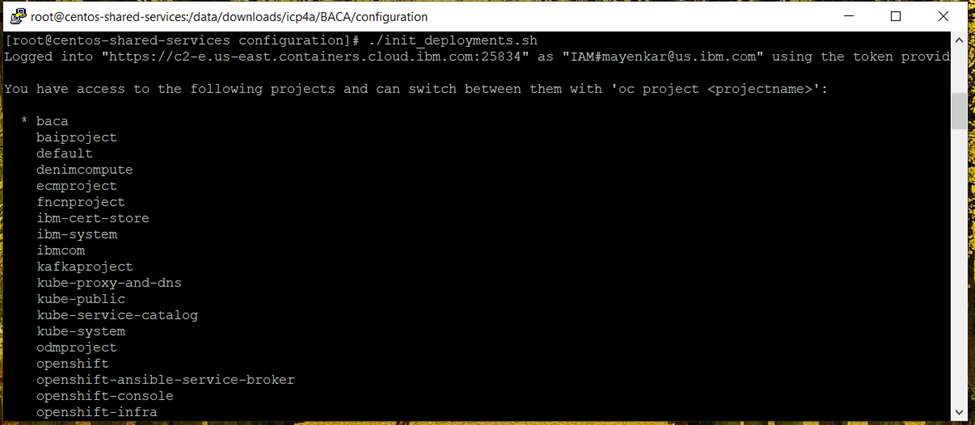
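As a text companion to the screenshot, the two settings called out above might look like the fragment below. This is only a sketch: the plain `VAR=value` syntax is an assumption about how the template is written, and every other parameter in `common.sh` is omitted.

```shell
# Fragment of common.sh (sketch; only the two settings discussed above).
# Assumption: the template uses plain VAR=value assignments.
PVCCHOICE=2          # PVs and PVCs were created manually in the earlier step
HELM_INIT_BEFORE=Y   # Helm is already installed; do not install it again
```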

- Run `./init_deployment.sh` so that it creates the SSL certificates and secrets required for Content Analyzer. Note that `init_deployment.sh` uses the `loginToCluster` function in `bashfunctions.sh` to log in to the OpenShift cluster.

  - The `loginToCluster` function assumes that the Kubernetes API server is exposed on port `8443`, and it also requires a user ID and password to log in to the cluster. If you are using a managed OpenShift cluster on IBM Cloud, this assumption is not valid, so you have to modify the function to use the login command copied from the OpenShift web console.
  - The Copy Login Command option is available in the drop-down menu in the upper right corner of the OpenShift web console. Update the `loginToCluster` function with the copied command.

Before you run the script, make sure that the OpenShift project that you created earlier is the current project. If it is not, make it the current project using the command `oc project <projectname>`.

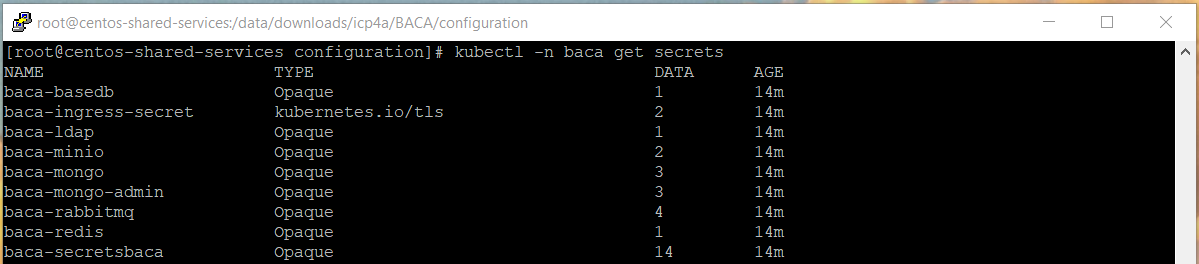
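One way the modified `loginToCluster` function could look on a managed cluster is sketched below. The original function's arguments are not shown in this guide, so this version takes none, and `OCP_API_URL` and `OCP_TOKEN` are hypothetical variable names; take both values from the login command that you copy from the web console.

```shell
# Sketch of a token-based loginToCluster for a managed OpenShift cluster.
# OCP_API_URL and OCP_TOKEN are hypothetical names, not variables defined
# by the product scripts.
loginToCluster() {
  if [ -z "${OCP_API_URL:-}" ] || [ -z "${OCP_TOKEN:-}" ]; then
    echo "OCP_API_URL and OCP_TOKEN must be set" >&2
    return 1
  fi
  # Same form as the command copied from the web console.
  oc login "$OCP_API_URL" --token="$OCP_TOKEN"
}
```

Reading the token from a variable rather than pasting it into `bashfunctions.sh` keeps the credential out of the script file; whether that suits your environment is a judgment call.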

- Validate the objects created by running the following commands. To check secrets, run the following command:

kubectl -n <projectname> get secrets

or

oc get secrets

- Verify that 9 secrets were created (7 if you are not using LDAP or ingress). Refer to Create PVs, PVCs, certificates and secrets using init_deployment.sh for more information.
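Counting by eye is error-prone because a project usually also contains secrets that the script did not create, such as service account tokens. The sketch below counts rows of `kubectl get secrets --no-headers` output, optionally keeping only names that match a pattern. The function name is hypothetical, and any pattern you pass is your own assumption about how the secrets are named; check the names in the listing first.

```shell
# Sketch: count secrets from `kubectl get secrets --no-headers` output read
# on stdin. With an argument, only rows whose NAME column matches that
# regular expression are counted; without one, every row is counted.
count_secrets() {
  awk -v pat="${1:-.}" '$1 ~ pat { n++ } END { print n + 0 }'
}
```

For example, `kubectl -n <projectname> get secrets --no-headers | count_secrets` prints the total number of secrets in the project.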

-

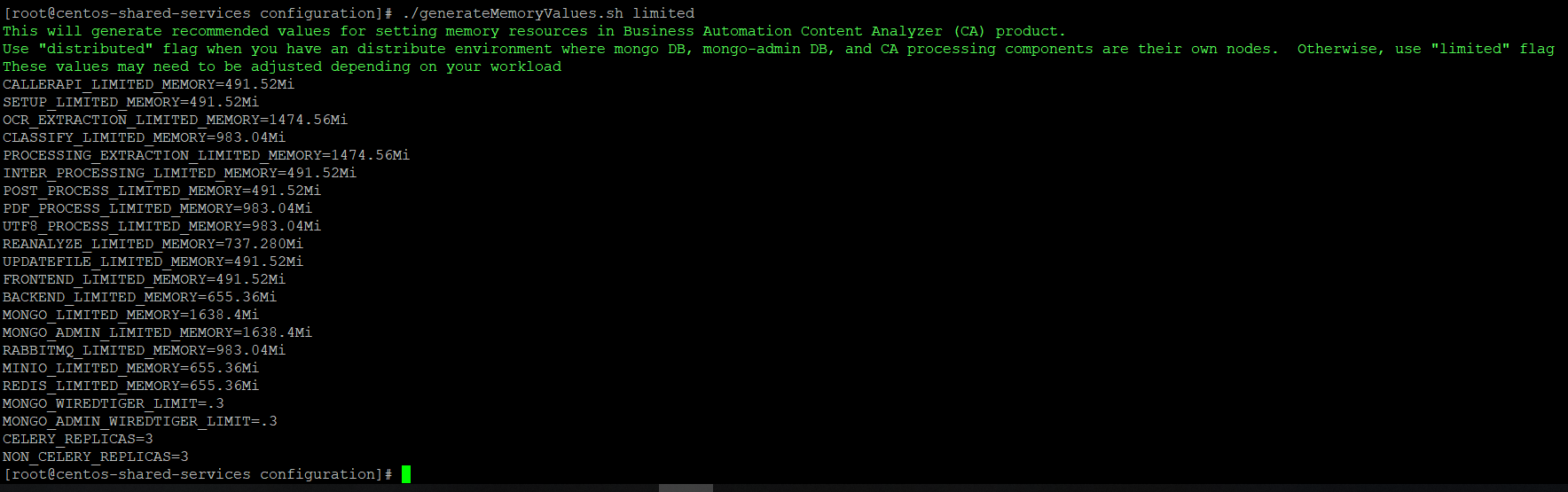

Run

./generateMemoryValues.sh <limited>or./generateMemoryValues.sh <distributed>. For smaller system (5 worker-nodes or less) where the Mongo database pods will be on the same worker node as other pods, use limited option.
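The choice between the two options can be expressed as a simple rule of thumb. The sketch below applies only the worker-node threshold mentioned above; it does not check where the Mongo pods are actually scheduled, and the function name is hypothetical.

```shell
# Sketch: pick the generateMemoryValues.sh option from the worker-node count.
# Prints "limited" for 5 worker nodes or fewer, otherwise "distributed".
memory_profile_for() {
  case "$1" in
    ''|*[!0-9]*)
      echo "worker count must be a non-negative integer" >&2
      return 1
      ;;
  esac
  if [ "$1" -le 5 ]; then
    echo limited
  else
    echo distributed
  fi
}
```

Treat the result as a starting point: a larger cluster that still co-locates the Mongo pods with other pods may also need the limited option.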

-

Copy these values for replacement in the

values.yamlfile if you want to deploy CA using Helm chart or replacing these values in theca-deploy.ymlfile if you want to deploy CA using kubernetes YAML files. Refer to Limiting the amount of available memory for more information. -

Download the Helm Chart. Extract the helm chart from

ibm-dba-prod-1.0.0.tgzand copy to theibm-dbamc-baca-proddirectory. -

Edit the

values.yamlfile to populate configuration parameters. Go through the Helm Chart configuration parameters section and populatingvalues.yamlwith correct values for options with the parameters and values. Note that anything not documented here typically does not need to be changed. -

After the

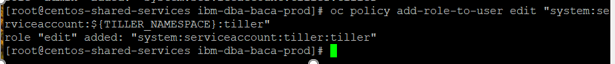

values.yamlis filled out properly, you can proceed to deploy Content Analyzer with the Helm chart. However, before you run helm, the Tiller server will need at least "edit" access to each project where it will manage applications. In the case that Tiller will be handling Charts containing Role objects, admin access will be needed. Refer to Getting started with Helm on OpenShift for more information. Run the commandoc policy add-role-to-user edit "system:serviceaccount:${TILLER_NAMESPACE}:tiller"to grant the Tiller edit access to the project.

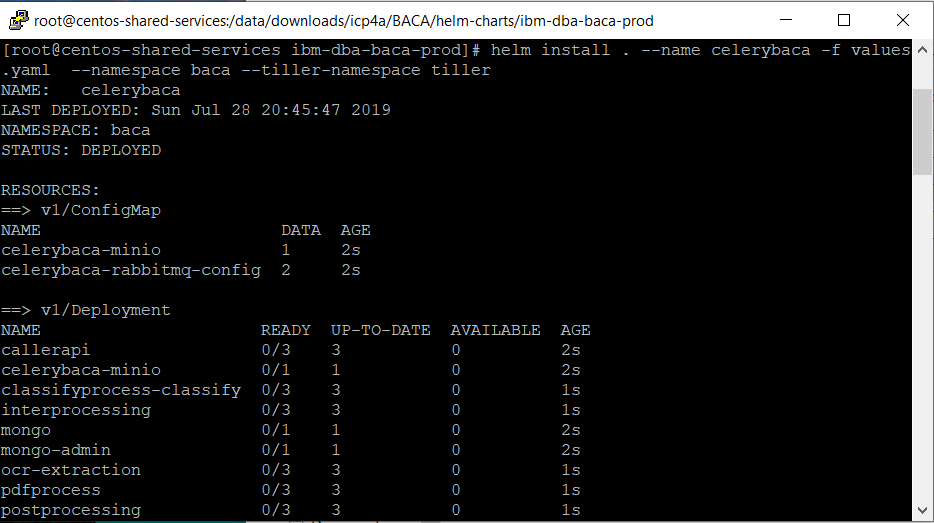
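The role grant can be parameterized so that the same line covers both the "edit" and the "admin" case described above. This is a minimal sketch with a hypothetical function name; it only prints the command, and it adds `-n <projectname>` on the assumption that you want to name the target project explicitly rather than rely on the current project.

```shell
# Sketch: print the oc command that grants Tiller's service account a role.
# Arguments: Tiller namespace, target project, role (defaults to "edit").
tiller_grant_command() {
  echo "oc policy add-role-to-user ${3:-edit} \"system:serviceaccount:$1:tiller\" -n $2"
}
```

For example, `tiller_grant_command tiller baca admin` prints the grant for charts that contain Role objects.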

- To deploy Content Analyzer, from the `ibm-dba-baca-prod` directory run:

$ helm install . --name celery<projectname> -f values.yaml --namespace <projectname> --tiller-namespace tiller

where <projectname> is the name of the project you created earlier.

Because of the way the readiness probes are configured, it may take 10 minutes or more after the pods start before they enter a ready state. Refer to Deploying with Helm charts for more information.
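Rather than re-running `oc get pods` by hand during that wait, you can poll until every pod reports ready. The sketch below is one possible approach, not part of the product scripts: the function names, the 900-second default timeout, and the 15-second interval are all assumptions. It treats a pod as ready when its READY column shows equal numbers (for example `1/1`), so a project that contains completed job pods would never pass this check.

```shell
# Sketch: wait until all pods in a project are ready.

# Reads `kubectl get pods --no-headers` output on stdin; succeeds only if
# there is at least one pod and every READY column shows n/n.
all_pods_ready() {
  awk '
    { split($2, r, "/"); total++; if (r[1] != r[2]) notready++ }
    END { exit !(total > 0 && notready == 0) }
  '
}

# Arguments: project, timeout in seconds (default 900), poll interval in
# seconds (default 15). Returns non-zero if the timeout expires first.
wait_for_pods() {
  wfp_project="$1"
  wfp_timeout="${2:-900}"
  wfp_interval="${3:-15}"
  wfp_waited=0
  until kubectl -n "$wfp_project" get pods --no-headers 2>/dev/null | all_pods_ready; do
    [ "$wfp_waited" -ge "$wfp_timeout" ] && return 1
    sleep "$wfp_interval"
    wfp_waited=$((wfp_waited + wfp_interval))
  done
}
```

A call such as `wait_for_pods baca` blocks until the pods are ready or about 15 minutes have passed, which leaves some margin over the 10 minutes mentioned above.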

- Once all the pods are running, complete the post-deployment steps listed here.