Event Streams on Cloud hands-on lab

This document is an introductory hands-on lab on IBM Event Streams on Cloud, focused on creating the service instance and its topics.


Pre-requisites¶

This lab requires the following:

- An IBM Cloud account. You can get one by using the register link on https://cloud.ibm.com/login; creating an account is free of charge.

On your development workstation, you will need:

- IBM Cloud CLI (https://cloud.ibm.com/docs/cli?topic=cloud-cli-getting-started)

- IBM Cloud CLI Event Streams plugin (ibmcloud plugin install event-streams)

Login CLI¶

Account and resource group concepts¶

Like any other IBM Cloud service, Event Streams can belong to a resource group, is controlled by user roles, and is accessible via API keys. To get familiar with these concepts, it is worth studying how an IBM Cloud account relates to resource groups and services. The following diagram summarizes the objects managed in IBM Cloud:

To summarize:

- An account represents the billable entity and can have multiple users.

- Users are given access to resource groups.

- Applications are identified with a service ID.

- To restrict permissions for using specific services, you can assign specific access policies to the service ID and user ID.

- Resource groups organize any type of resource (services, clusters, VMs...) managed by Identity and Access Management (IAM).

- Resource groups are not scoped by location.

- Access groups organize a set of users and service IDs into a single entity, so permissions can be assigned to all of them at once.
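To make these relationships concrete, here is a deliberately simplified Python model of an account, its resource groups, and its access groups. It is an illustration only and does not reflect how IAM actually evaluates policies. Real IAM policies also carry platform and service roles, and they can be assigned directly to users or service IDs, which this sketch ignores. All names (eda-lab, eda-developers, user:bob, serviceid:demo-app) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceInstance:
    name: str
    service: str  # catalog service name, e.g. "messagehub" for Event Streams


@dataclass
class ResourceGroup:
    name: str
    instances: list[ServiceInstance] = field(default_factory=list)


@dataclass
class AccessGroup:
    name: str
    members: set[str] = field(default_factory=set)  # user IDs and service IDs
    resource_groups: set[str] = field(default_factory=set)  # granted resource group names


@dataclass
class Account:
    name: str  # the billable entity
    resource_groups: dict[str, ResourceGroup] = field(default_factory=dict)
    access_groups: list[AccessGroup] = field(default_factory=list)

    def can_access(self, principal: str, instance_name: str) -> bool:
        """Simplified rule: allowed if one of the principal's access groups is
        granted the resource group that contains the instance."""
        for group in self.access_groups:
            if principal not in group.members:
                continue
            for rg_name in group.resource_groups:
                rg = self.resource_groups.get(rg_name)
                if rg is not None and any(i.name == instance_name for i in rg.instances):
                    return True
        return False


# Hypothetical setup: one resource group holding the Event Streams instance.
acct = Account("Bill's Account")
acct.resource_groups["eda-lab"] = ResourceGroup(
    "eda-lab", [ServiceInstance("Event Streams-wn", "messagehub")])
acct.access_groups.append(
    AccessGroup("eda-developers", {"user:bob", "serviceid:demo-app"}, {"eda-lab"}))

print(acct.can_access("serviceid:demo-app", "Event Streams-wn"))  # True
print(acct.can_access("user:alice", "Event Streams-wn"))          # False
```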

Create an Event Streams service instance¶

From the IBM Cloud Dashboard page, you can create a new resource, using the right top button Create resource.

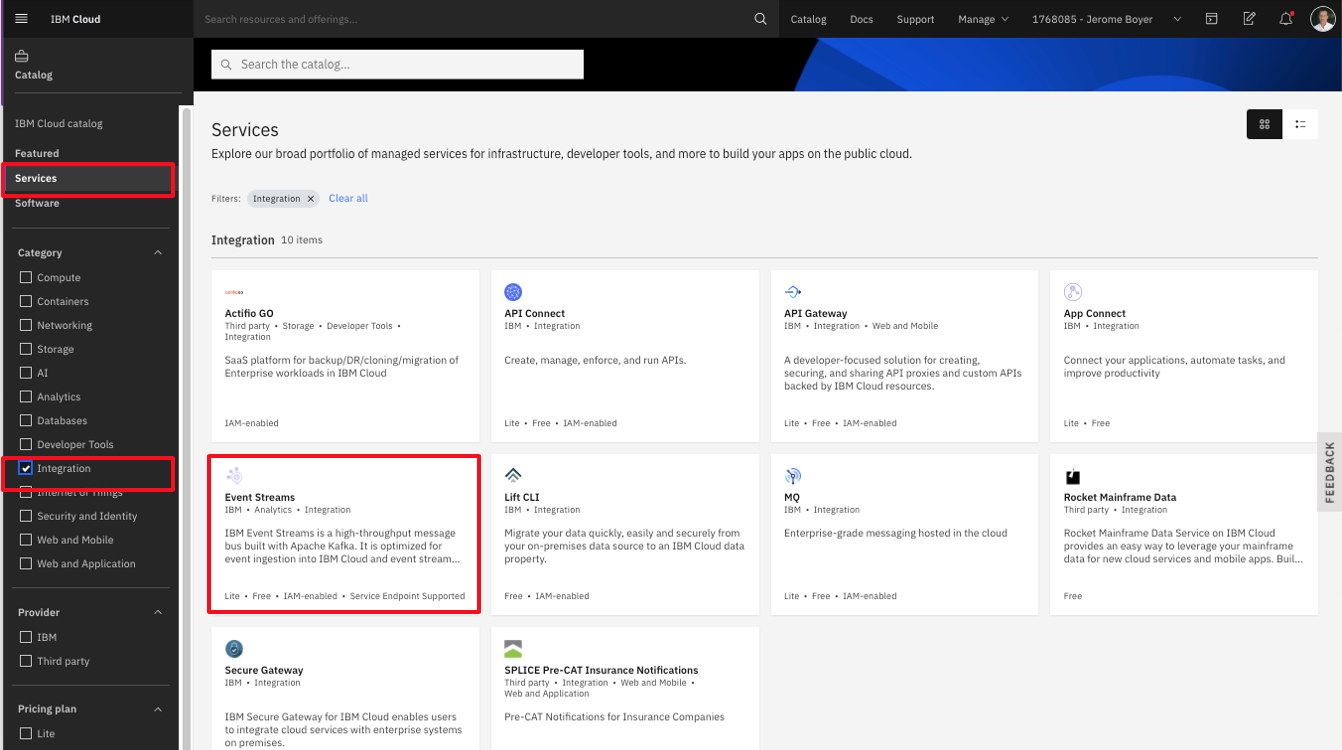

which leads to the service and feature catalog. From there in the services view, select the integration category and then the Event Streams tile:

You can access this screen from this URL: https://cloud.ibm.com/catalog/event-streams.

Plan characteristics¶

On the first page of the Event Streams creation flow, select the region, the pricing plan, a service name, and the resource group.

For the region, note that the Lite plan is available only in Dallas and is intended for proofs of concept. It is recommended to select a region close to your on-premises data center. For a description of each plan, the product documentation covers the different plans in detail.

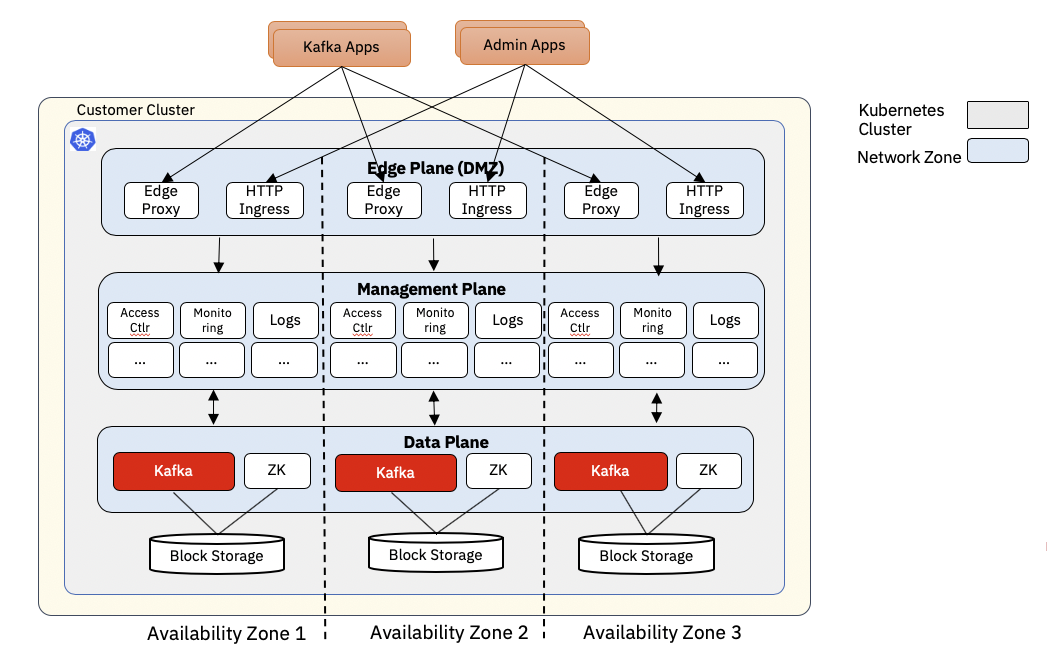

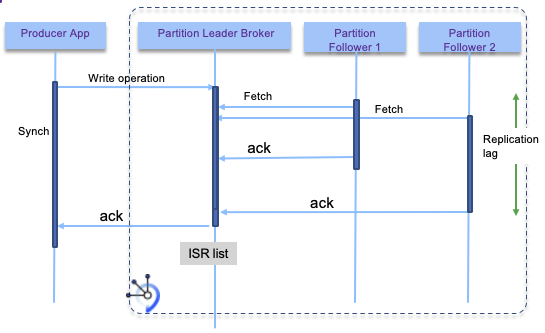

Multi-tenancy means the Kafka cluster is shared with other tenants. The cluster topology spans multiple availability zones within the same region. The following diagram illustrates a simple view of this topology with the different network zones and availability zones:

We will address fine-grained access control in the security lab.

As described in the Kafka concept introduction, a topic may have several partitions. Partitions improve throughput because consumers in the same group can read different partitions in parallel, and producers can publish to multiple partitions.

The plan sets a limit on the total number of partitions.

Each partition's records are persisted to the file system, so how long records are kept on disk is controlled by the maximum retention period and the maximum total size. These Kafka configurations are described in the topic and broker documentation.

For the Standard plan, the first page also has a price estimator. The two main pricing drivers are the number of partition instances and the number of GB consumed: each consumer reading from a topic partition increases the number of bytes consumed. The cost is per month.
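The following Python sketch shows why key-based partitioning preserves per-key ordering while spreading load: records with the same key always hash to the same partition. It uses CRC32 from the standard library as a stand-in hash. Kafka's default Java partitioner uses murmur2 instead, so actual partition numbers on Event Streams will differ. The keys and values are made up.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition with a stable hash (CRC32 stand-in)."""
    if num_partitions < 1:
        raise ValueError("a topic needs at least one partition")
    return zlib.crc32(key.encode("utf-8")) % num_partitions


orders = [("customer-1", "order A"), ("customer-2", "order B"),
          ("customer-1", "order C"), ("customer-3", "order D")]

# Append each record to its partition; order within a partition is kept.
partitions: dict[int, list[str]] = {}
for key, value in orders:
    partitions.setdefault(choose_partition(key, 3), []).append(f"{key}:{value}")

for pid in sorted(partitions):
    print(pid, partitions[pid])
```

Both customer-1 records land in the same partition in the order they were produced. Records for different keys are spread across partitions, which is what lets consumers in one group work in parallel.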
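The pricing reasoning can be sketched as simple arithmetic, assuming each consumer group reads the full topic once. The unit prices below are placeholders, not IBM's rates. The real estimator may also include terms this sketch omits. What the sketch shows is that consumed volume, and therefore cost, grows with the number of consumer groups reading the same data.

```python
def estimate_monthly_cost(partitions: int, gb_produced: float, consumer_groups: int,
                          price_per_partition: float, price_per_gb_consumed: float) -> float:
    """Monthly cost = partition charge + consumption charge.

    Each consumer group reads every record once, so the GB consumed is the
    GB produced multiplied by the number of groups reading the topic.
    """
    gb_consumed = gb_produced * consumer_groups
    return partitions * price_per_partition + gb_consumed * price_per_gb_consumed


# Placeholder unit prices for illustration only; use the estimator for real rates.
PRICE_PER_PARTITION = 2.0
PRICE_PER_GB = 0.5

one_group = estimate_monthly_cost(6, 100, 1, PRICE_PER_PARTITION, PRICE_PER_GB)
two_groups = estimate_monthly_cost(6, 100, 2, PRICE_PER_PARTITION, PRICE_PER_GB)
print(one_group, two_groups)  # 62.0 112.0
```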

Creating Event Streams instance with IBM Cloud CLI¶

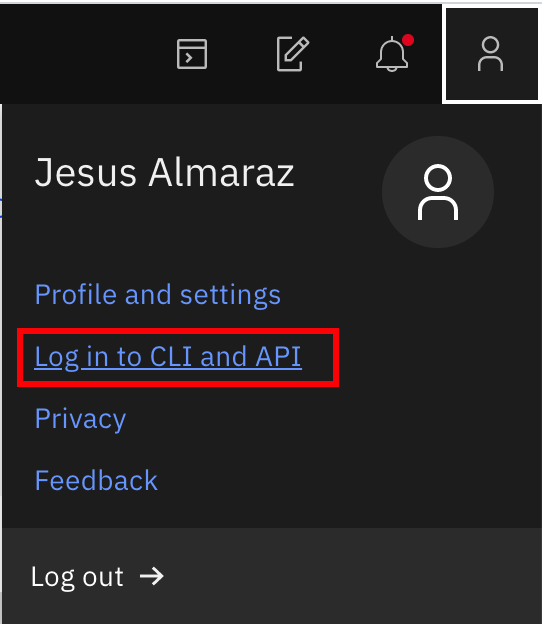

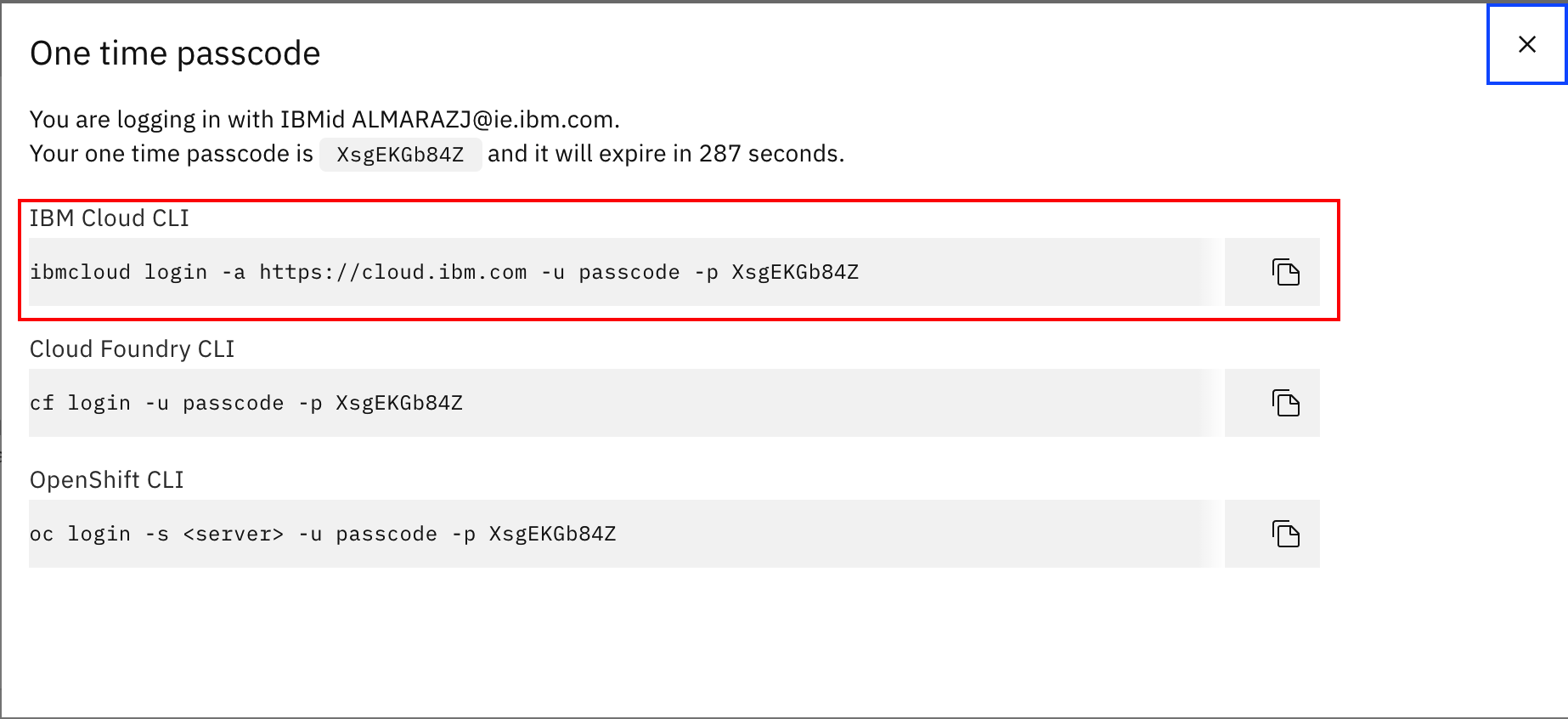

- Go to IBM Cloud and click the user avatar in the top right corner. Then click the Log in to CLI and API option:

- Copy the IBM Cloud CLI login command.

- Open a terminal window, then paste and run the command:

    $ ibmcloud login -a https://cloud.ibm.com -u passcode -p XsgEKGb84Z
    API endpoint: https://cloud.ibm.com
    Authenticating...
    OK

    Targeted account bill s Account (b63...) <-> 195...

    Select a region (or press enter to skip):
    1. au-syd
    2. in-che
    3. jp-osa
    4. jp-tok
    5. kr-seo
    6. eu-de
    7. eu-gb
    8. us-south
    9. us-south-test
    10. us-east
    Enter a number> 6
    Targeted region eu-de

    API endpoint:      https://cloud.ibm.com
    Region:            eu-de
    User:              A<>
    Account:           Bill s Account (b63...) <-> 195...
    Resource group:    No resource group targeted, use ibmcloud target -g RESOURCE_GROUP
    CF API endpoint:
    Org:
    Space:

- List your services with ibmcloud resource service-instances and make sure your IBM Event Streams instance is listed:

    $ ibmcloud resource service-instances
    Retrieving instances with type service_instance in all resource groups in all locations under account Kedar Kulkarni's Account as ALMARAZJ@ie.ibm.com...
    OK
    Name                                   Location   State    Type
    IBM Cloud Monitoring with Sysdig-rgd   us-south   active   service_instance
    apikey for simple toolchain            us-east    active   service_instance
    aapoc-event-streams                    us-south   active   service_instance
    Event Streams-wn                       eu-de      active   service_instance

  We can see our instance, called Event Streams-wn.

- Create an Event Streams instance using the CLI.

- List your IBM Event Streams instance details with ibmcloud resource service-instance <instance_name>:

    $ ibmcloud resource service-instance Event\ Streams-wn
    Retrieving service instance Event Streams-wn in all resource groups under account Kedar Kulkarni's Account as ALMARAZJ@ie.ibm.com...
    OK

    Name:                  Event Streams-wn
    ID:                    crn:v1:bluemix:public:messagehub:eu-de:a/b636d1d8...cfa:b05be9...2e687a::
    GUID:                  b05be932...e687a
    Location:              eu-de
    Service Name:          messagehub
    Service Plan Name:     enterprise-3nodes-2tb
    Resource Group Name:
    State:                 active
    Type:                  service_instance
    Sub Type:
    Created at:            2020-05-11T15:54:48Z
    Created by:            bob.the.builder@someemail.com
    Updated at:            2020-05-11T16:49:18Z
    Last Operation:
                           Status    sync succeeded
                           Message   Synchronized the instance

  Mind the \ character in the command: it escapes the space in your IBM Event Streams instance name.

- Initialize the IBM Event Streams plugin for the IBM Cloud CLI with ibmcloud es init.

- Check all the CLI commands available to manage and interact with your IBM Event Streams instance with ibmcloud es:

    $ ibmcloud es
    NAME:
       ibmcloud es - Plugin for IBM Event Streams (build 1908221834)

    USAGE:
       ibmcloud es command [arguments...] [command options]

    COMMANDS:
       broker                 Display details of a broker.
       broker-config          Display broker configuration.
       cluster                Display details of the cluster.
       group                  Display details of a consumer group.
       group-delete           Delete a consumer group.
       group-reset            Reset the offsets for a consumer group.
       groups                 List the consumer groups.
       init                   Initialize the IBM Event Streams plugin.
       topic                  Display details of a topic.
       topic-create           Create a new topic.
       topic-delete           Delete a topic.
       topic-delete-records   Delete records from a topic before a given offset.
       topic-partitions-set   Set the partitions for a topic.
       topic-update           Update the configuration for a topic.
       topics                 List the topics.
       help, h                Show help

    Enter 'ibmcloud es help [command]' for more information about a command.

- List your cluster configuration with ibmcloud es cluster:

    $ ibmcloud es cluster
    Details for cluster
    Cluster ID                                      Controller
    mh-tcqsppdpzlrkdmkbgmgl-4c20...361c6f175-0000   0

    Details for brokers
    ID   Host                                                                                                Port   Rack
    0    kafka-0.mh-tcqsppdpzlrkdmkbgmgl-4c201a12d......22e361c6f175-0000.eu-de.containers.appdomain.cloud   9093   fra05
    1    kafka-1.mh-tcqsppdpzlrkdmkbgmgl-4c201a12d......22e361c6f175-0000.eu-de.containers.appdomain.cloud   9093   fra02
    2    kafka-2.mh-tcqsppdpzlrkdmkbgmgl-4c201a12d......22e361c6f175-0000.eu-de.containers.appdomain.cloud   9093   fra04

    No cluster-wide dynamic configurations found.

- Look at broker details with ibmcloud es broker 0:

    $ ibmcloud es broker 0
    Details for broker
    ID   Host                                                                        Port   Rack
    0    broker-0-t19zgvnykgdqy1zl.kafka.svc02.us-south.eventstreams.cloud.ibm.com   9093   dal10

    Details for broker configuration
    Name                   Value                                                                                            Sensitive?
    broker.id              0                                                                                                false
    broker.rack            dal10                                                                                            false
    advertised.listeners   SASL_EXTERNAL://broker-0-t19zgvnykgdqy1zl.kafka.svc02.us-south.eventstreams.cloud.ibm.com:9093   false
    OK

- Get a detailed view of a broker's configuration with ibmcloud es broker-config 0.
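If you script these checks, the table printed by ibmcloud resource service-instances can be parsed as in the Python sketch below. It assumes the columns are separated by at least two spaces, as in the sample output from this lab. The parse_instances name is hypothetical. For robust automation, prefer a structured output format from the CLI, if your version offers one, over parsing text.

```python
import re

# Sample taken from the lab output, with columns separated by two or more spaces.
SAMPLE_OUTPUT = """\
Retrieving instances with type service_instance in all resource groups in all locations under account Kedar Kulkarni's Account as ALMARAZJ@ie.ibm.com...
OK
Name                                   Location   State    Type
IBM Cloud Monitoring with Sysdig-rgd   us-south   active   service_instance
apikey for simple toolchain            us-east    active   service_instance
aapoc-event-streams                    us-south   active   service_instance
Event Streams-wn                       eu-de      active   service_instance
"""


def parse_instances(text: str) -> list[dict[str, str]]:
    """Turn the service-instances table into a list of dicts keyed by header.

    Splits on runs of two or more spaces, so single spaces inside a
    name such as "apikey for simple toolchain" are kept.
    """
    lines = text.splitlines()
    header_index = next(i for i, line in enumerate(lines) if line.startswith("Name"))
    headers = re.split(r"\s{2,}", lines[header_index].strip())
    rows = []
    for line in lines[header_index + 1:]:
        if line.strip():
            cells = re.split(r"\s{2,}", line.strip())
            rows.append(dict(zip(headers, cells)))
    return rows


instances = parse_instances(SAMPLE_OUTPUT)
event_streams = [row for row in instances if row["Name"] == "Event Streams-wn"]
print(event_streams)
# [{'Name': 'Event Streams-wn', 'Location': 'eu-de', 'State': 'active', 'Type': 'service_instance'}]
```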

We will see other CLI commands in future labs.
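The ID field returned by ibmcloud resource service-instance is a Cloud Resource Name (CRN). The Python sketch below splits a CRN into the ten colon-separated segments of IBM Cloud's CRN layout. The parse_crn helper is hypothetical. The sample keeps the ... elisions exactly as they appear in the lab output.

```python
from typing import NamedTuple


class Crn(NamedTuple):
    prefix: str            # always "crn"
    version: str           # e.g. "v1"
    cname: str             # cloud name, e.g. "bluemix"
    ctype: str             # cloud type, e.g. "public"
    service_name: str      # "messagehub" for Event Streams
    location: str          # region, e.g. "eu-de"
    scope: str             # "a/<account id>"
    service_instance: str  # instance GUID
    resource_type: str     # empty for the instance itself
    resource: str          # empty for the instance itself


def parse_crn(value: str) -> Crn:
    parts = value.split(":", 9)  # keep any extra ':' inside the last segment
    if len(parts) != 10 or parts[0] != "crn":
        raise ValueError(f"not a 10-segment CRN: {value!r}")
    return Crn(*parts)


crn = parse_crn("crn:v1:bluemix:public:messagehub:eu-de:a/b636d1d8...cfa:b05be9...2e687a::")
print(crn.service_name, crn.location, crn.scope)
# messagehub eu-de a/b636d1d8...cfa
```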

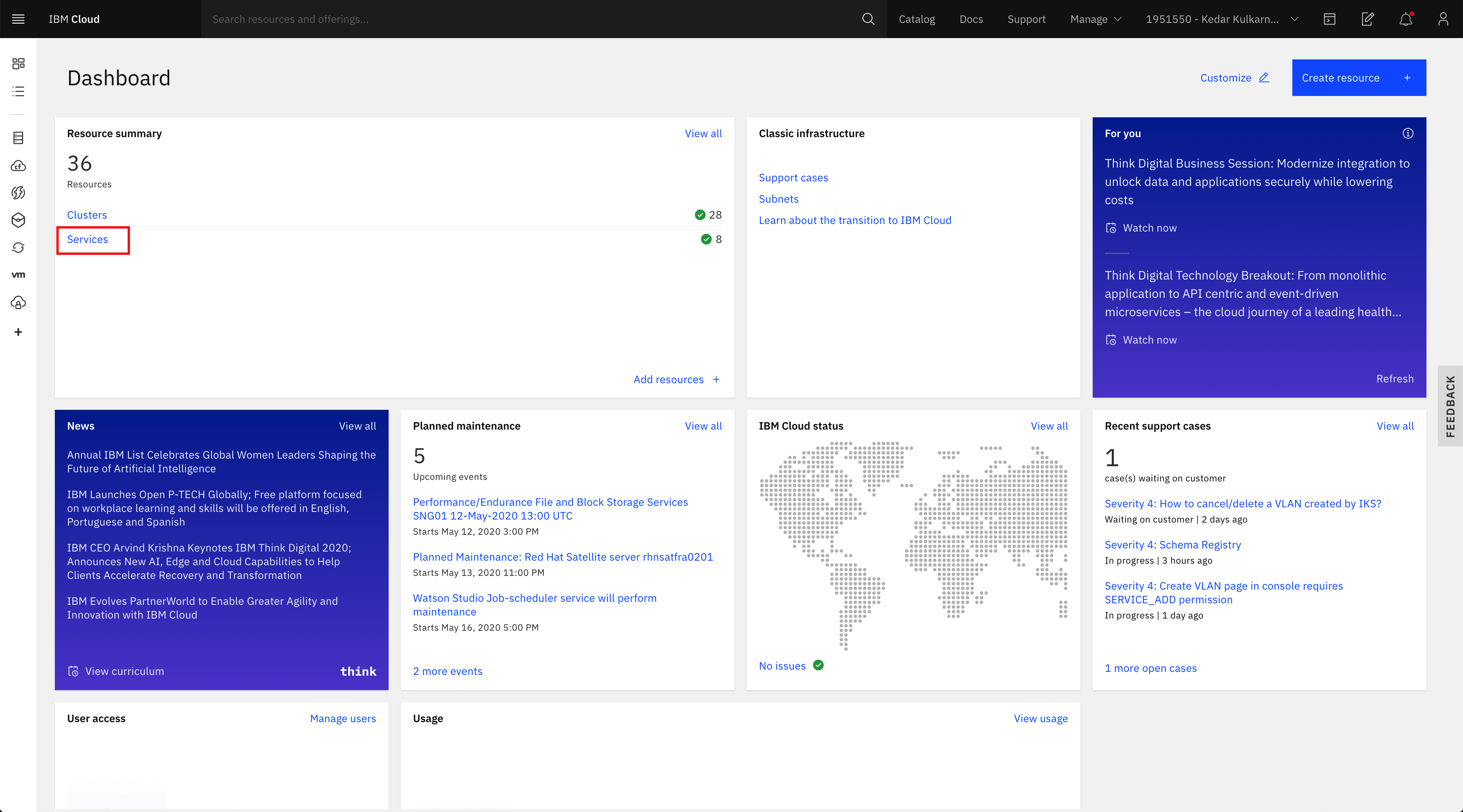

Coming back another time¶

When you come back to the IBM Cloud dashboard, the simplest way to find the Event Streams service is to go to the Services list:

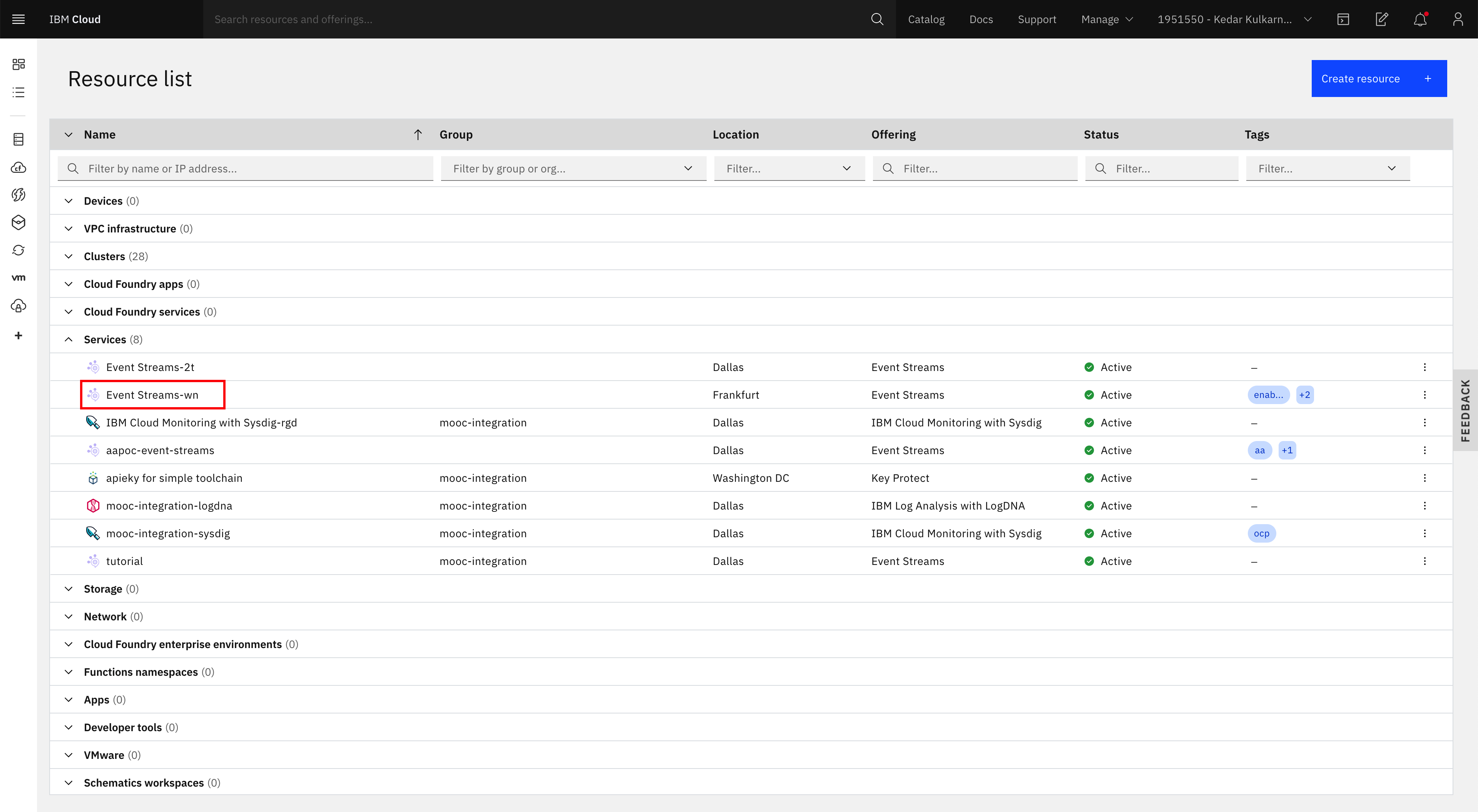

- Click on your IBM Event Streams instance:

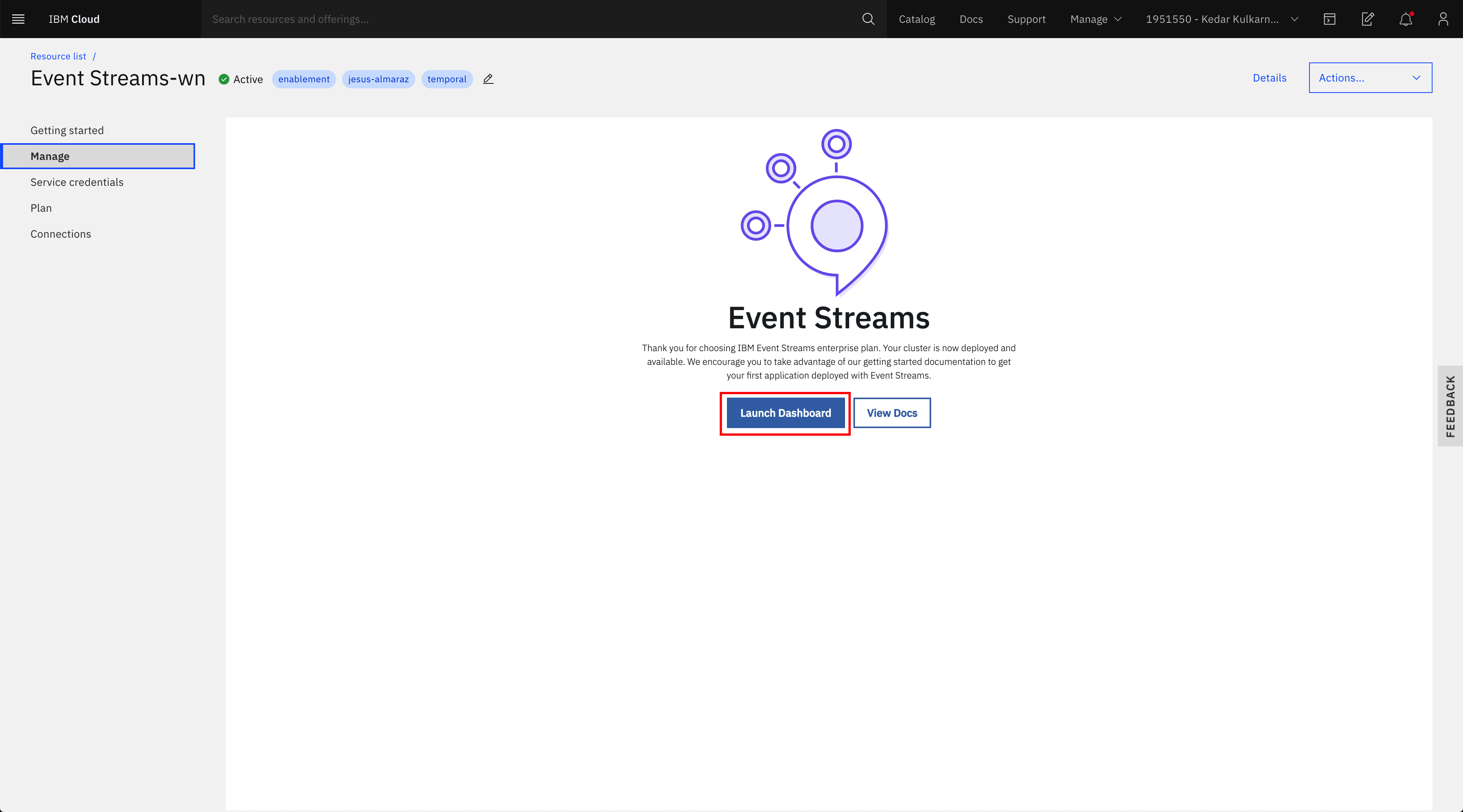

- Click the Launch Dashboard button to open the IBM Event Streams dashboard.

Main Event Streams Dashboard page¶

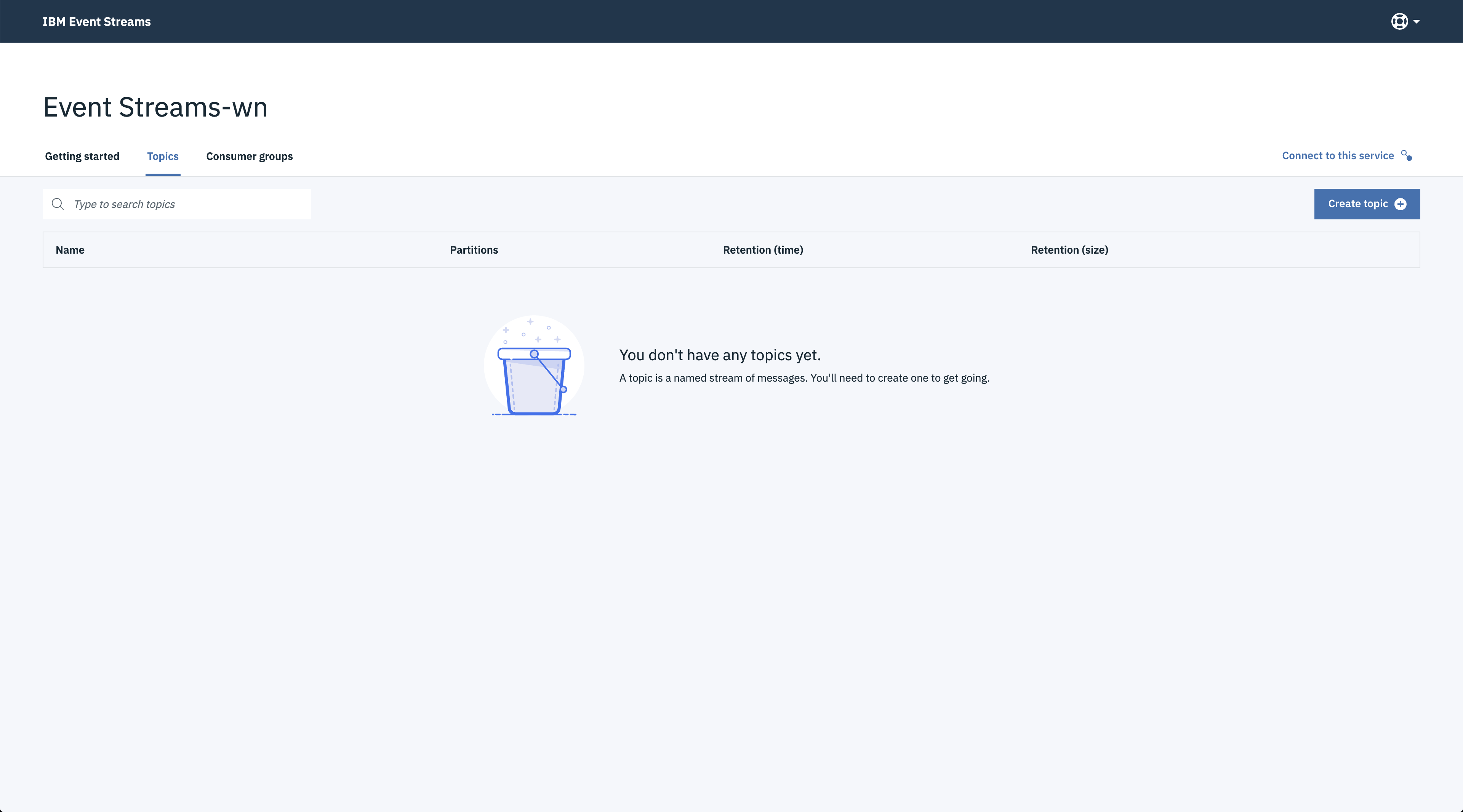

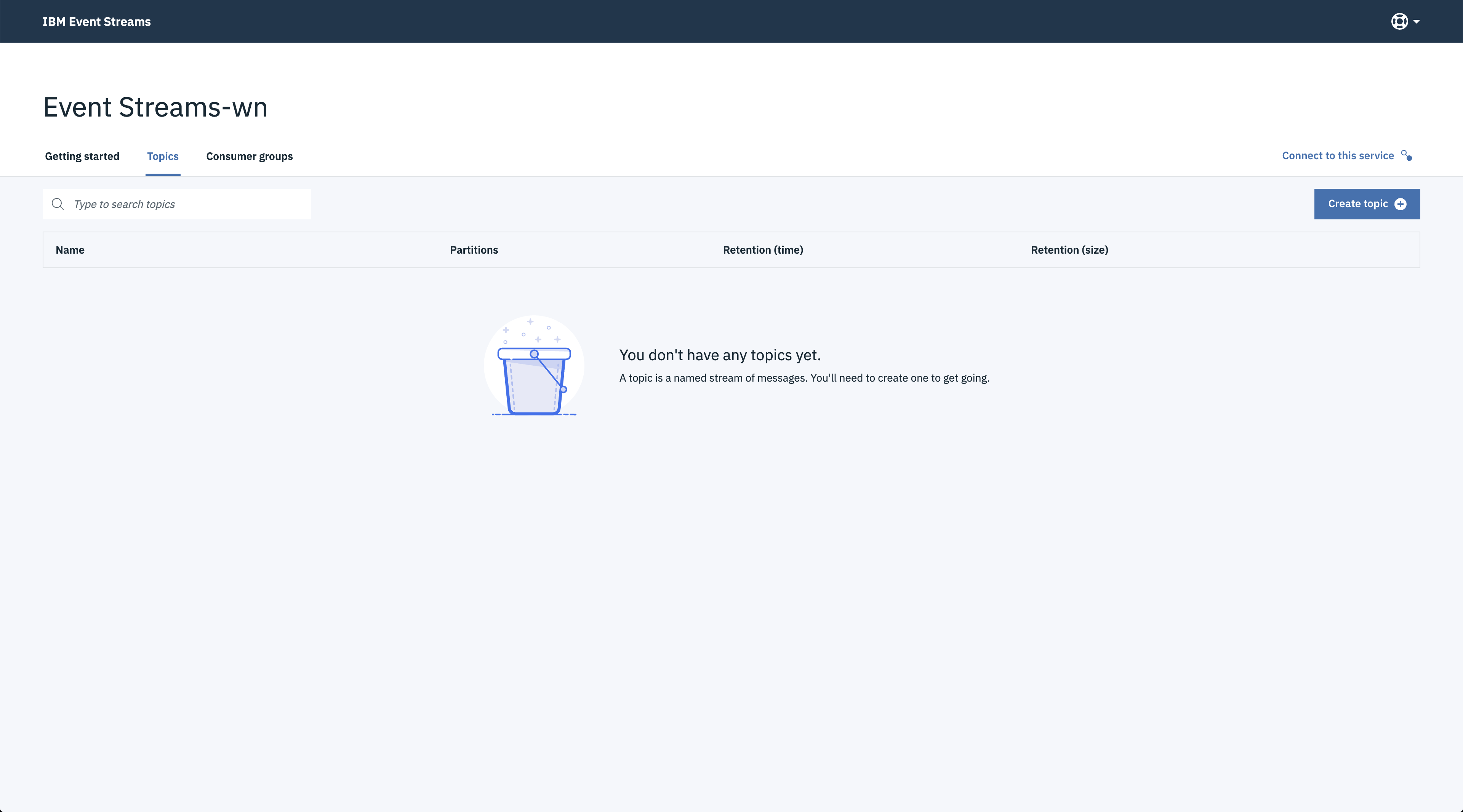

Once the instance is created, or when you come back to the service, you reach the Manage panel, as illustrated in the previous figure.

From the Dashboard we can access the Topics and Consumer groups panels.

Create topic¶

In this section, we create, list, and delete topics, first using the user interface and then the IBM Event Streams CLI.

- Open the IBM Event Streams user interface (go to your IBM Event Streams service in the IBM Cloud portal and click the Launch Dashboard button). Once there, click the Topics tab in the top menu:

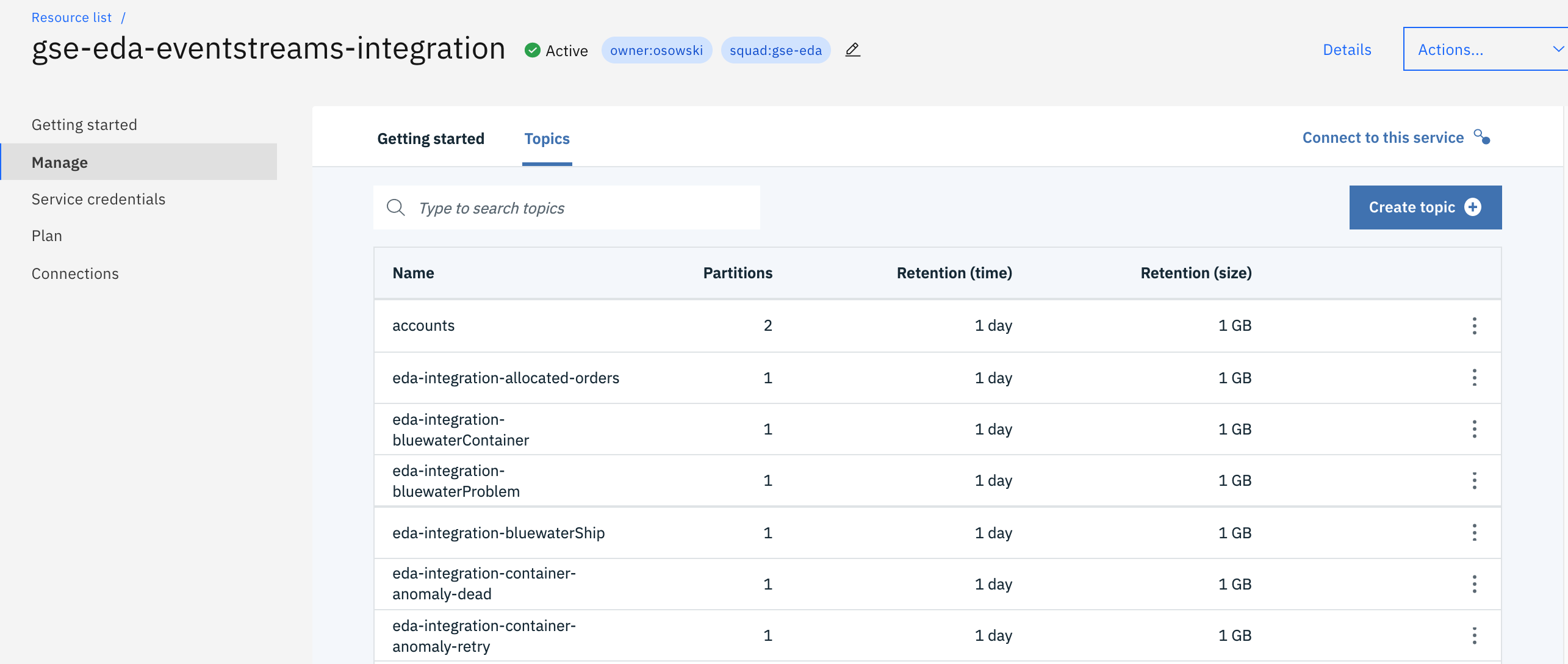

Let's create a demo-topic-ui topic. If you need to revisit topic concepts, you can read this note. The topics view lists the existing topics.

From this list, an administrator can delete an existing topic or create a new one.

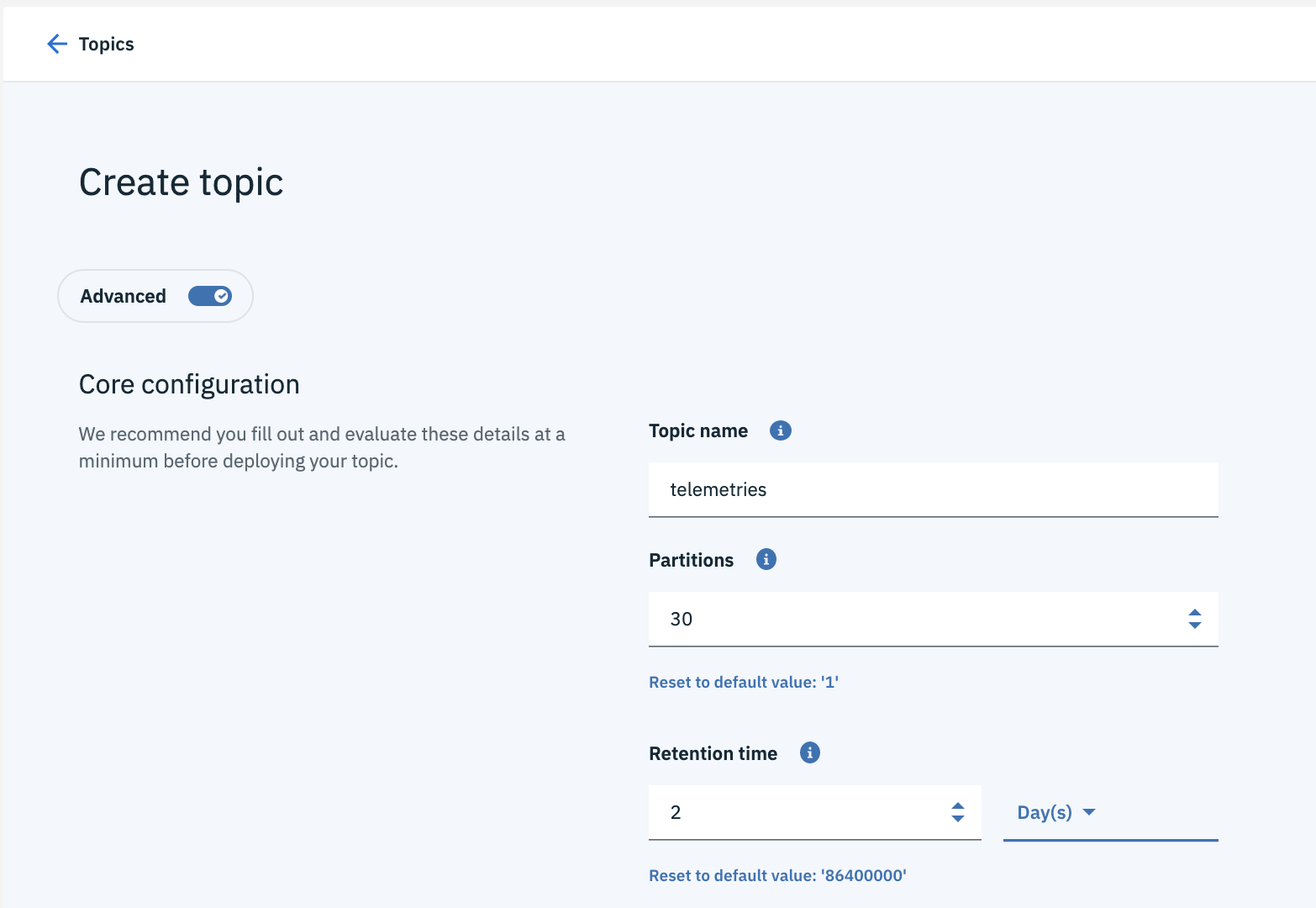

- The 'create topic' button leads to the step by step process.

- Switch to Advanced mode to access the complete set of parameters. The first panel defines the core configuration.

Some parameters to understand:

- Number of partitions: the default value is 1. If the data can be partitioned without losing its semantics (for example, the ordering that related records require), you can increase the number of partitions.

- Retention time: how long messages are retained before they are deleted to free up space. If your messages are not read by a consumer within this time, they will be missed. It maps to the retention.ms Kafka topic configuration.

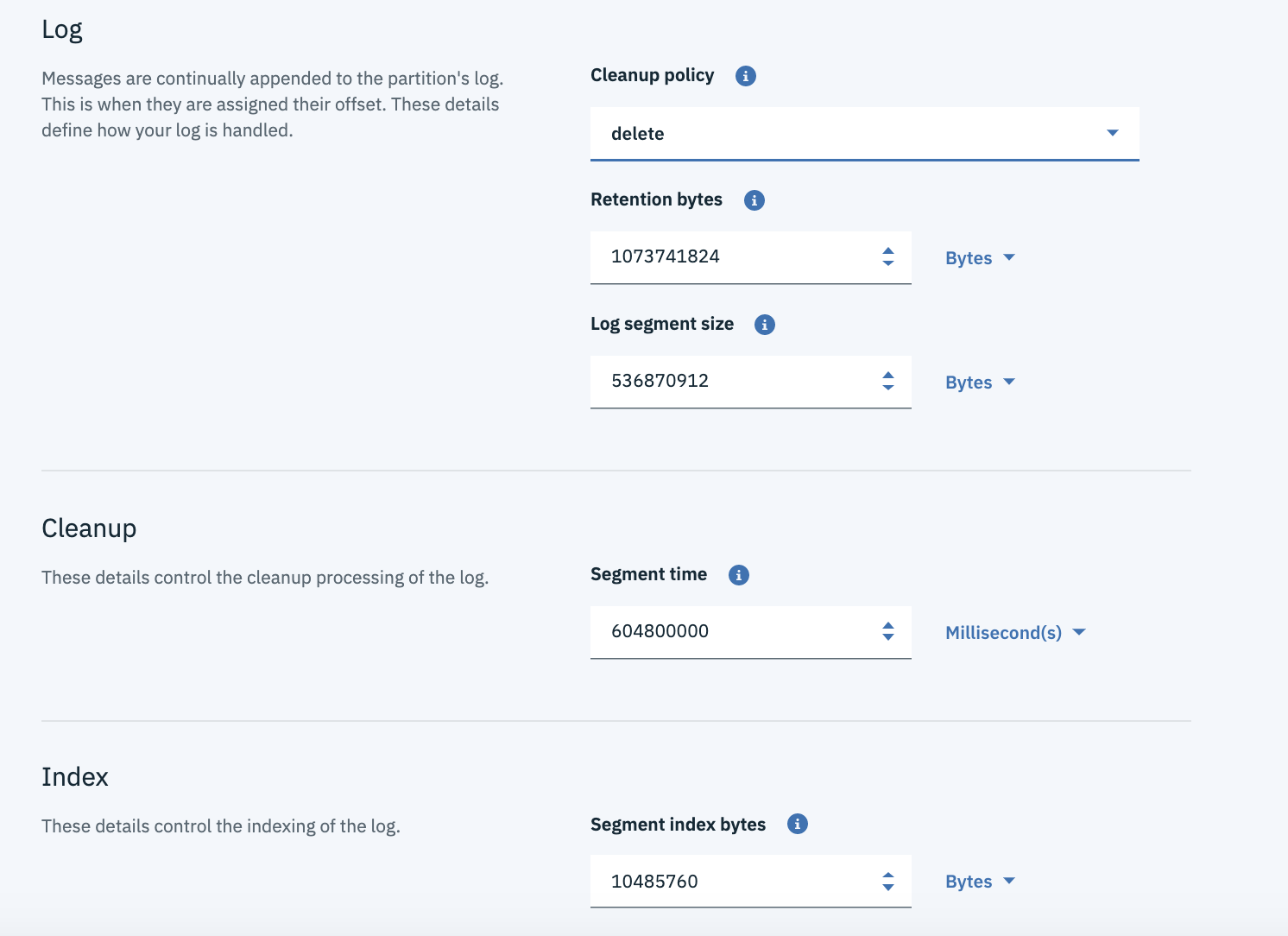

The bottom part of the configuration page covers log, cleanup, and indexing settings.

- The partition's log parameter section includes a cleanup policy (cleanup.policy), which can be:

    - delete: discard old segments when their retention time or size limit has been reached.

    - compact: retain at least the last known value for each message key within the log of a single topic partition, so the topic behaves like a table in a database.

    - compact, delete: compact the log and also discard old segments when their retention time or size limit has been reached.

- Retention bytes (retention.bytes): the maximum size a partition (which consists of log segments) can grow to before old log segments are discarded to free up space.

- Log segment size (segment.bytes): the maximum size in bytes of a single log segment file.

- Cleanup segment time (segment.ms): the period of time after which Kafka forces the log to roll, even if the segment file isn't full, so that retention can delete or compact old data.

- Index (segment.index.bytes): the size of the index that maps offsets to file positions.

Log compaction is performed by the log cleaner, a pool of background threads that recopy log segment files, removing records whose key also appears in the head of the log.

The replication factor is set to three, with min.insync.replicas set to two.
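The effect of the compact policy can be sketched in a few lines of Python. This is a simplified model, not the log cleaner itself. The real cleaner works segment by segment, never compacts the active segment, and treats tombstones (null values) specially. The sketch ignores all of that. Keys and values are hypothetical.

```python
def compact(log: list[tuple[int, str, str]]) -> list[tuple[int, str, str]]:
    """Keep only the newest record for each key.

    Records are (offset, key, value) tuples in offset order. Surviving records
    keep their original offsets, so offsets become sparse after compaction.
    """
    latest_offset = {}
    for offset, key, _value in log:
        latest_offset[key] = offset  # later offsets overwrite earlier ones
    return [record for record in log if latest_offset[record[1]] == record[0]]


log = [(0, "k1", "v1"), (1, "k2", "v1"), (2, "k1", "v2"), (3, "k3", "v1"), (4, "k2", "v2")]
compacted = compact(log)
print(compacted)  # [(2, 'k1', 'v2'), (3, 'k3', 'v1'), (4, 'k2', 'v2')]

# Reading the compacted partition end to end gives the "table" view: key -> latest value.
table = {key: value for _offset, key, value in compacted}
print(table)  # {'k1': 'v2', 'k3': 'v1', 'k2': 'v2'}
```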
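These settings map directly to Kafka topic configuration keys. The Python sketch below converts human-friendly units into those keys. Its helper names are hypothetical. It also shows the arithmetic behind a replication factor of three with min.insync.replicas of two: producers using acks=all keep writing as long as at least two replicas are in sync, so one replica can be unavailable. With one day, 1024 MiB, and 512 MiB, it reproduces the values that ibmcloud es topic reports for demo-topic in the CLI part of this lab.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000
MIB = 1024 * 1024
CLEANUP_POLICIES = {"delete", "compact", "compact,delete", "delete,compact"}


def topic_config(retention_days: float, retention_mib: int, segment_mib: int,
                 cleanup_policy: str = "delete", replication_factor: int = 3,
                 min_insync: int = 2) -> dict[str, str]:
    """Build Kafka topic-level settings from human-friendly units."""
    if cleanup_policy not in CLEANUP_POLICIES:
        raise ValueError(f"unsupported cleanup.policy: {cleanup_policy!r}")
    if not 1 <= min_insync <= replication_factor:
        raise ValueError("min.insync.replicas must be between 1 and the replication factor")
    return {
        "cleanup.policy": cleanup_policy,
        "retention.ms": str(int(retention_days * MS_PER_DAY)),
        "retention.bytes": str(retention_mib * MIB),  # per partition
        "segment.bytes": str(segment_mib * MIB),
        "min.insync.replicas": str(min_insync),
    }


def replica_failures_tolerated(replication_factor: int, min_insync: int) -> int:
    """Replicas that can be out of sync while acks=all producers still succeed."""
    return replication_factor - min_insync


config = topic_config(retention_days=1, retention_mib=1024, segment_mib=512)
print(config["retention.ms"], config["retention.bytes"], config["segment.bytes"])
# 86400000 1073741824 536870912
print(replica_failures_tolerated(3, 2))  # 1
```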

- We can now see our new topic:

- To delete a topic, click the topic options button at the right end of the topic row, click Delete this topic, and then click the Delete button in the confirmation pop-up window:

- The topic should now be deleted:

Create topic with CLI¶

- List your topics with ibmcloud es topics.

- Create a topic (by default with 1 partition and 3 replicas). Run ibmcloud es topic-create --help for further options to configure the topic creation.

- List topics:

- Display details of a topic:

    $ ibmcloud es topic demo-topic
    Details for topic demo-topic
    Topic name   Internal?   Partition count   Replication factor
    demo-topic   false       1                 3

    Partition details for topic demo-topic
    Partition ID   Leader   Replicas   In-sync
    0              2        [2 1 0]    [2 1 0]

    Configuration parameters for topic demo-topic
    Name                  Value
    cleanup.policy        delete
    min.insync.replicas   2
    segment.bytes         536870912
    retention.ms          86400000
    retention.bytes       1073741824
    OK

- Delete records in a topic (in the command below, we want to delete records on partition 0 before offset 5, and on partition 1 from offset 0):

- Add partitions to an existing topic by setting the new target number of partitions:

- Delete a topic:

- List topics:
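To reason about topic-delete-records, note that the CLI help describes it as deleting records before a given offset. Everything below that offset in the partition disappears, and the remaining records keep their offsets. The Python sketch below models that rule only, not the CLI's implementation, and its function name is hypothetical. It applies offset 5 to partition 0 and offset 0 to partition 1. An offset of 0 removes nothing, because no record sits below it.

```python
def delete_records_before(partitions: dict[int, list[int]],
                          offsets: dict[int, int]) -> dict[int, list[int]]:
    """Return each partition's remaining offsets after deleting records below
    the given offset. Partitions not listed in `offsets` are untouched."""
    return {
        pid: [o for o in held if o >= offsets.get(pid, 0)]
        for pid, held in partitions.items()
    }


before = {0: list(range(8)), 1: list(range(3))}
after = delete_records_before(before, {0: 5, 1: 0})
print(after)  # {0: [5, 6, 7], 1: [0, 1, 2]}
```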

For the latest list of commands, see the CLI reference manual.
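One consequence of topic-partitions-set deserves a sketch. Kafka can only increase a topic's partition count. Once the count changes, keyed records may hash to a different partition than before, while existing records stay where they are. The Python sketch below counts how many keys would move. It uses CRC32 as a stand-in for the murmur2 hash of Kafka's default Java partitioner, so the exact numbers are illustrative only. The key names are hypothetical.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    # Stand-in hash: CRC32 here, murmur2 in Kafka's default Java partitioner.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def moved_keys(keys: list[str], old_count: int, new_count: int) -> list[str]:
    """Keys whose partition differs after changing the partition count."""
    return [k for k in keys if partition_for(k, old_count) != partition_for(k, new_count)]


keys = [f"customer-{n}" for n in range(20)]
moved = moved_keys(keys, old_count=1, new_count=3)
print(f"{len(moved)} of {len(keys)} keys now map to a different partition")
```

Going from one partition to three, every key that no longer hashes to partition 0 moves. New records for those keys are written to another partition than their older records, so per-key ordering is only guaranteed within each partition.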

Getting started applications¶

From the Manage dashboard, we can download a getting-started application that runs two processes, a consumer and a producer. Alternatively, we can use a second application available in this repository.

Using the Event Streams on cloud getting started app¶

To build the code, you need either Gradle installed locally or the Gradle Docker image:

docker run --rm -u gradle -v "$PWD":/home/gradle/project -w /home/gradle/project gradle gradle <gradle-task>

The instructions are in this documentation and can be summarized as:

- Clone the GitHub repository:

- Build the code, either with the Gradle CLI (gradle build) or with the Gradle Docker image:

docker run --rm -u gradle -v "$PWD":/home/gradle/project -w /home/gradle/project gradle gradle build

- Start the consumer, passing the comma-separated list of bootstrap brokers and the API key as arguments:

java -jar ./build/libs/kafka-java-console-sample-2.0.jar broker-0-qnprtqnp7hnkssdz.kafka.svc01.us-east.eventstreams.cloud.ibm.com:9093,broker-1-qnprtqnp7hnkssdz.kafka.svc01.us-east.eventstreams.cloud.ibm.com:9093,broker-2-qnprtqnp7hnkssdz.kafka.svc01.us-east.eventstreams.cloud.ibm.com:9093 am_rbb9e794mMwhE-KGPYo0hhW3h91e28OhT8IlruFe5 -consumer

- Start the producer with the same arguments:

java -jar ./build/libs/kafka-java-console-sample-2.0.jar broker-0-qnprtqnp7hnkssdz.kafka.svc01.us-east.eventstreams.cloud.ibm.com:9093,broker-1-qnprtqnp7hnkssdz.kafka.svc01.us-east.eventstreams.cloud.ibm.com:9093,broker-2-qnprtqnp7hnkssdz.kafka.svc01.us-east.eventstreams.cloud.ibm.com:9093 am_rbb9e794mMwhE-KGPYo0hhW3h91e28OhT8IlruFe5 -producer
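The broker list and API key used by the sample correspond to standard Kafka client settings. The Python sketch below renders a client properties file for SASL PLAIN over TLS. It uses the literal user name token with the API key as the password, which is how Event Streams authenticates Kafka clients. The TLS lines mirror settings commonly found in IBM's samples; check them against your service credentials. The helper names and the <api_key> placeholder are hypothetical.

```python
def bootstrap_servers(hosts: list[str], port: int = 9093) -> str:
    """Join broker host names into a bootstrap.servers value."""
    return ",".join(f"{host}:{port}" for host in hosts)


def client_properties(bootstrap: str, api_key: str) -> str:
    """Render Kafka client properties for SASL PLAIN over TLS (SASL_SSL)."""
    jaas = ("org.apache.kafka.common.security.plain.PlainLoginModule required "
            f'username="token" password="{api_key}";')
    settings = {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
        "ssl.protocol": "TLSv1.2",
        "ssl.enabled.protocols": "TLSv1.2",
        "ssl.endpoint.identification.algorithm": "HTTPS",
    }
    return "".join(f"{name}={value}\n" for name, value in settings.items())


# Broker host names from the sample commands above.
hosts = [f"broker-{i}-qnprtqnp7hnkssdz.kafka.svc01.us-east.eventstreams.cloud.ibm.com"
         for i in range(3)]
print(client_properties(bootstrap_servers(hosts), "<api_key>"))
```

bootstrap_servers(hosts) produces the same comma-separated list that the sample application takes as its first argument.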