Mirror Maker 2

Active/Passive Mirroring

Overview

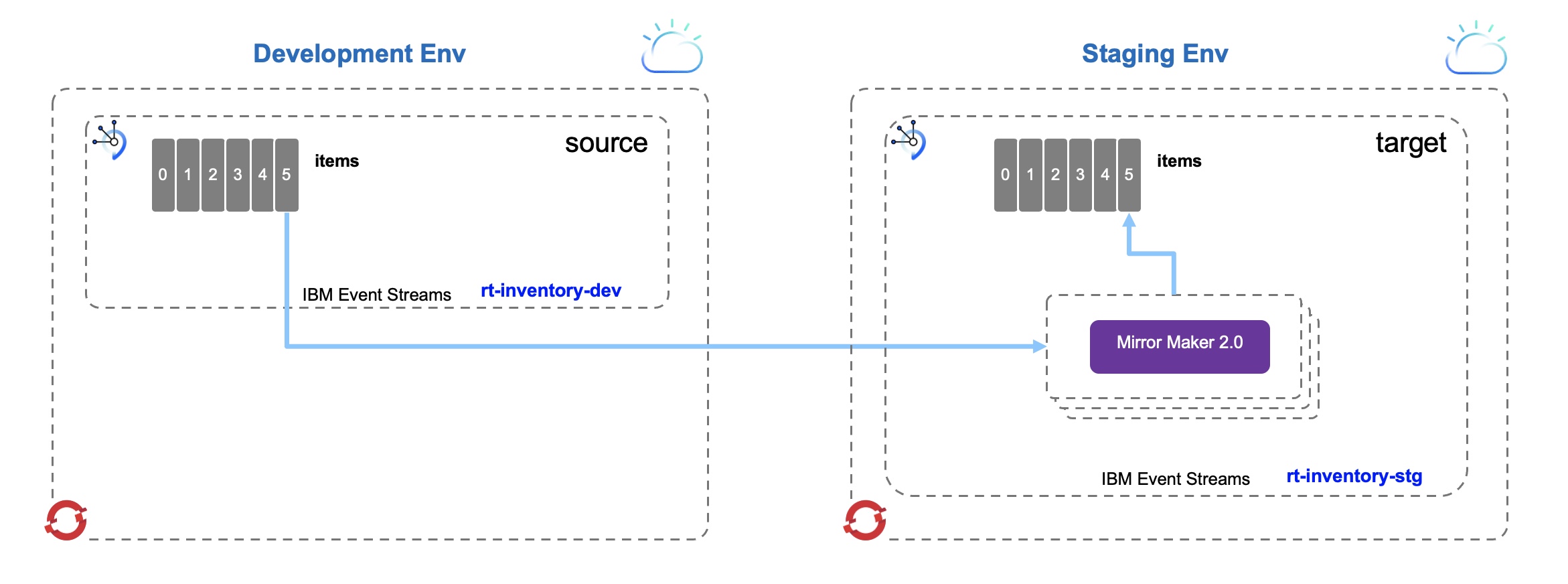

This demo shows how to use Mirror Maker 2 between two Kafka clusters running on OpenShift. It uses one IBM Event Streams instance on each side and the mirroring feature that ships as part of the IBM Event Streams Operator APIs.

- Cluster 1 (Active): our source cluster (Source). In this demo it represents a Development environment to which producers and consumers are connected.

- Cluster 2 (Passive): our target cluster (Target). In this demo it represents a Staging environment to which no producers or consumers are connected.

Upon failover, consumers connect to the newly promoted cluster (Cluster 2), which becomes the active cluster.

Mirror Maker 2 runs as a continuous background mirroring process and can be deployed in its own namespace. For the purposes of this demo, however, it is created in the destination namespace (rt-inventory-stg) and connects to the source Kafka cluster in the rt-inventory-dev namespace.

Pre-requisites

- You have access to one or two OpenShift clusters.

- The OpenShift cluster(s) have IBM Event Streams Operator version 3.0.x installed and available to all namespaces.

Steps

1- Creating Source (Origin) Kafka Cluster:

- Using the Web Console on the first OpenShift cluster, create a new project named rt-inventory-dev.

- Make sure that this project is selected and create a new EventStreams instance named rt-inventory-dev inside it.

Note

This cluster represents the Development Environment (Active)

- Once the EventStreams instance rt-inventory-dev is up and running, access its Admin UI to perform the following sub-tasks:

  - Create SCRAM credentials with username rt-inv-dev-user. This creates the rt-inv-dev-user secret.

  - Take a note of the SCRAM username and password.

  - Take a note of the bootstrap server address.

  - Generate TLS certificates. This creates the rt-inventory-dev-cluster-ca-cert secret.

  - Download and save the PEM certificate. You could rename it to es-src-cert.pem.

  - Create the Kafka topics items, items.inventory, and store.inventory, which will be used to demonstrate replication from source to target.

Note

The two secrets created above need to be copied to the target namespace (rt-inventory-stg) so that Mirror Maker 2 can reference them to connect to the source cluster.
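One way to copy these secrets without hand-editing YAML is to export each one as JSON (for example with oc get secret rt-inv-dev-user -n rt-inventory-dev -o json) and strip the metadata that belongs to the source namespace before applying it in rt-inventory-stg. The sketch below assumes the standard Kubernetes Secret layout; the function name is hypothetical. It keeps the secret names unchanged, since Mirror Maker 2 references the secrets by name, and drops ownerReferences, which would otherwise point at resources that do not exist in the target namespace.

```python
import json

# Metadata that Kubernetes manages per object, or that ties the Secret to the
# source namespace, and that should not be carried over to the copy.
SOURCE_ONLY_METADATA = (
    "namespace",
    "uid",
    "resourceVersion",
    "creationTimestamp",
    "managedFields",
    "ownerReferences",
    "selfLink",
)


def secret_for_namespace(secret_json: str, target_namespace: str) -> str:
    """Return an exported Secret rewritten for another namespace.

    The name and data are kept as-is; only source-specific metadata is dropped.
    """
    secret = json.loads(secret_json)
    metadata = secret.setdefault("metadata", {})
    for field in SOURCE_ONLY_METADATA:
        metadata.pop(field, None)
    metadata["namespace"] = target_namespace
    return json.dumps(secret, indent=2)
```

Write the returned JSON to a file and apply it with oc apply -f in the rt-inventory-stg project; repeat for both secrets.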

2- Creating Target (Destination) Kafka Cluster:

- Using the Web Console on the second OpenShift cluster (or on the same cluster, if you are using only one), create a new project named rt-inventory-stg.

- Make sure that this project is selected and create a new EventStreams instance named rt-inventory-stg inside it.

Note

This cluster represents the Staging Environment (Passive)

- Once the EventStreams instance rt-inventory-stg is up and running, access its Admin UI to perform the following sub-tasks:

  - Create SCRAM credentials with username rt-inv-stg-user. This creates the rt-inv-stg-user secret.

  - Take a note of the SCRAM username and password.

  - Take a note of the bootstrap server address.

  - Generate TLS certificates. This creates the rt-inventory-stg-cluster-ca-cert secret.

  - Download and save the PEM certificate. You could rename it to es-tgt-cert.pem.

Note

Mirror Maker 2 will reference the two secrets created above to connect to the target cluster.
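If you prefer not to download the PEM certificate through the Admin UI, the same CA certificate is stored base64-encoded under the ca.crt key of the cluster CA secret (rt-inventory-stg-cluster-ca-cert here, rt-inventory-dev-cluster-ca-cert on the source side). A minimal sketch, assuming the secret has been exported as JSON (for example with oc get secret rt-inventory-stg-cluster-ca-cert -n rt-inventory-stg -o json); the function names are hypothetical.

```python
import base64
import json
from pathlib import Path


def ca_pem_from_secret(secret_json: str) -> str:
    """Decode the ca.crt entry of an exported cluster CA Secret."""
    secret = json.loads(secret_json)
    # Secret values are base64-encoded in the "data" map.
    pem = base64.b64decode(secret["data"]["ca.crt"]).decode("ascii")
    if "-----BEGIN CERTIFICATE-----" not in pem:
        raise ValueError("ca.crt does not contain a PEM certificate")
    return pem


def save_ca_pem(secret_json: str, path: str) -> None:
    """Write the decoded certificate, e.g. to es-tgt-cert.pem."""
    Path(path).write_text(ca_pem_from_secret(secret_json))
```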

3- Creating the MirrorMaker 2 instance:

- Make sure that the rt-inventory-stg project is selected.

- Create the two secrets generated in Step 1 by copying their YAML definitions from the rt-inventory-dev project, preserving their names.

- Using the OpenShift Web Console (Administrator perspective), navigate to Operators -> Installed Operators.

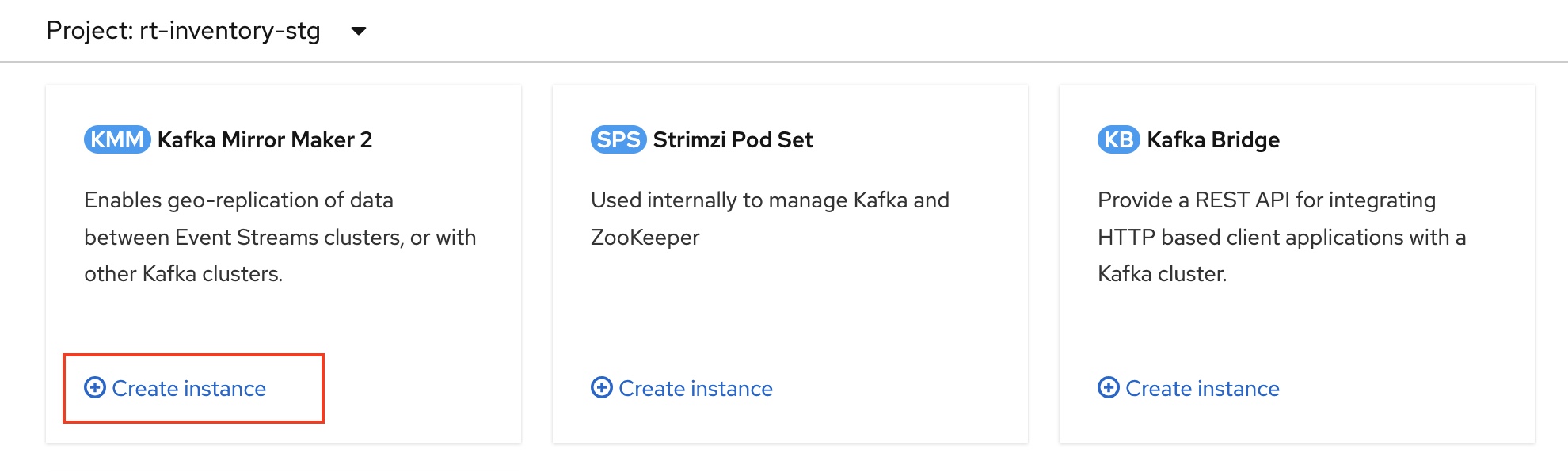

- On the Installed Operators page, click IBM Event Streams.

- On the Available APIs page, find Kafka Mirror Maker 2, then click Create instance.

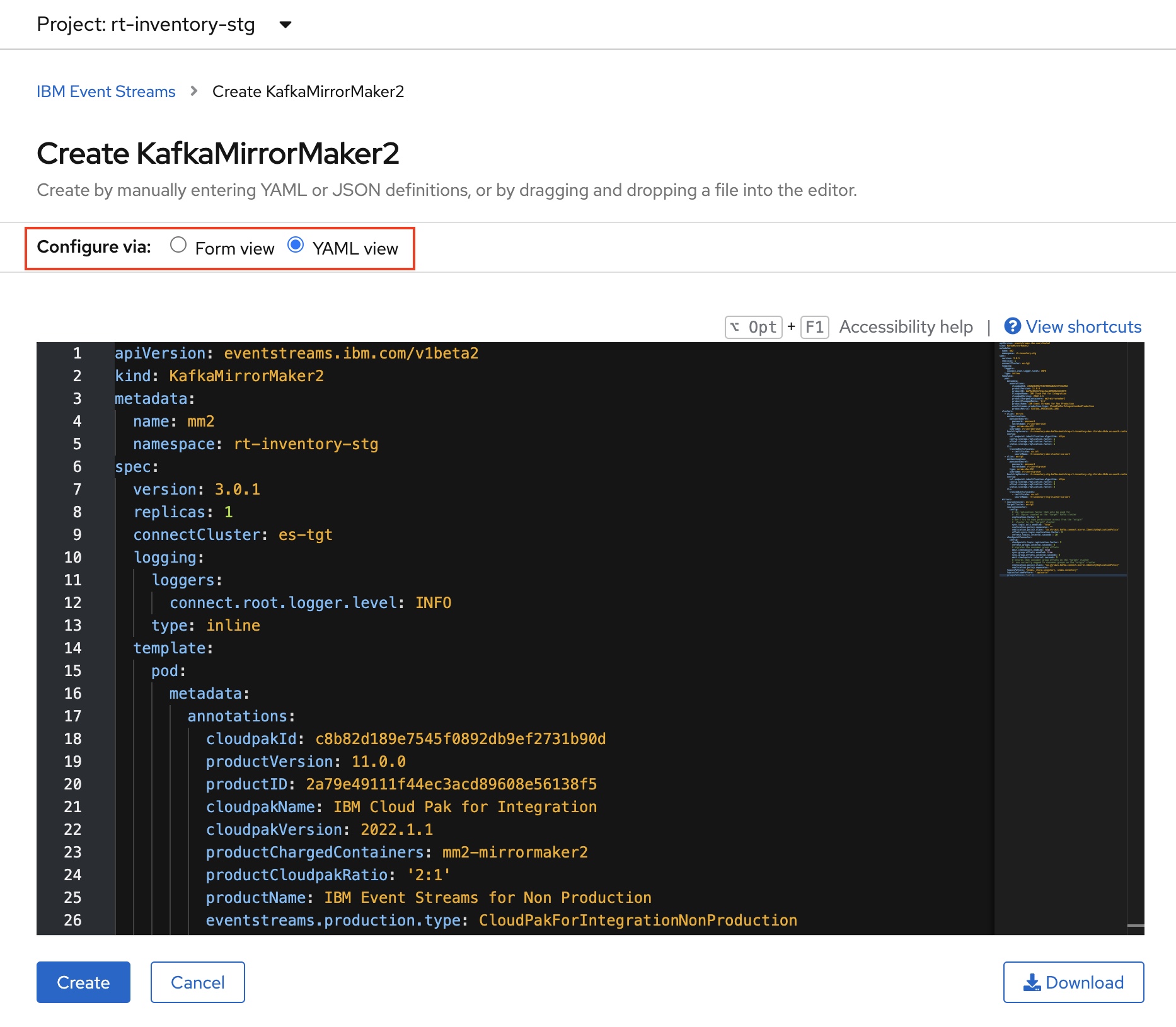

- Select Configure via YAML view to use the YAML file provided in this demo.

- Change the bootstrapServers address (line 36 of the YAML) to the address of your Source Kafka bootstrap server (from Step 1).

- Change the bootstrapServers address (line 53 of the YAML) to the address of your Target Kafka bootstrap server (from Step 2).

- If the same namespaces and SCRAM usernames are used as indicated in the previous steps, no further changes are required.

- Click

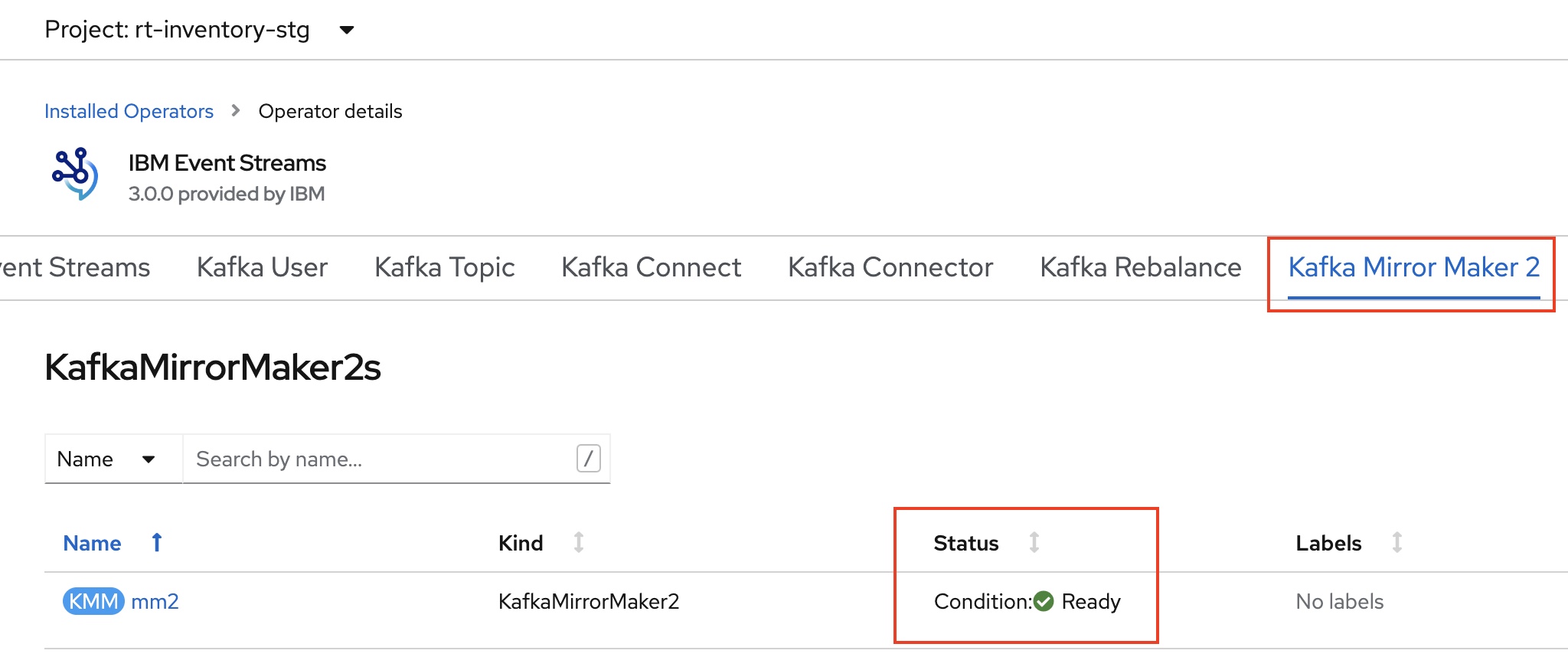

Createto apply the YAML changes and createKafkaMirrorMaker2instance. - In few seconds, check the status of

KafkaMirrorMaker2instance from Kafka Mirror Maker 2 Tab. - The status should be

Condition: Ready.

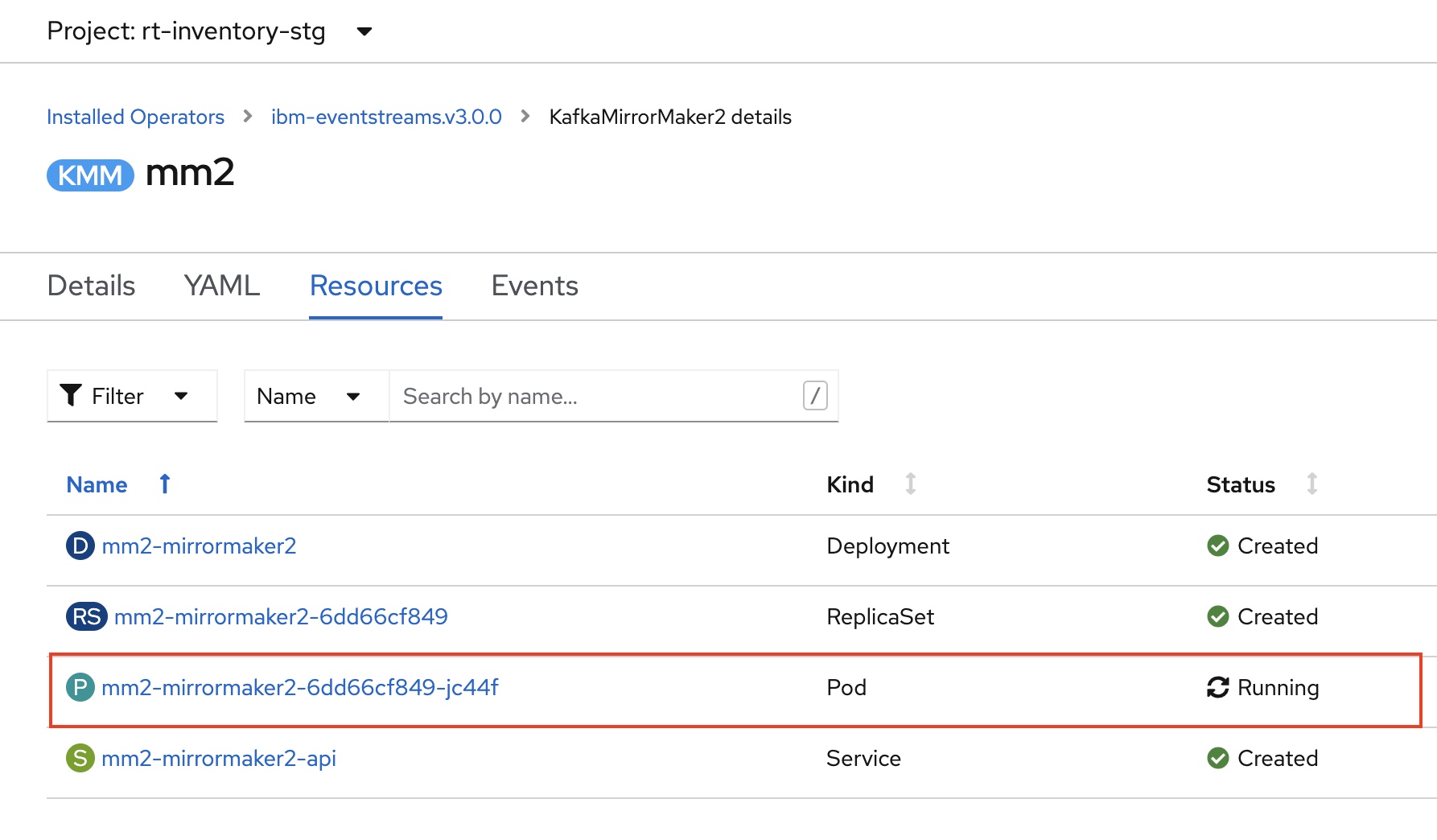

- The KafkaMirrorMaker2 instance created (mm2) deploys several resources that can be checked from its Resources tab.

  - You might need to check the logs of the created Pod resource for errors or warnings.

- The mm2 instance now starts to mirror (replicate) the Kafka topics' events and the consumer group offsets from the source to the target cluster.

  - Only Kafka topics matching topicsPattern are replicated, and topics matching topicsExcludePattern are excluded.
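The demo's YAML file is not reproduced here. To show how its pieces fit together, the sketch below builds a KafkaMirrorMaker2 resource as a Python dict, following the Strimzi-style schema that the Event Streams operator exposes. The apiVersion, the cluster aliases (source, target), the topicsPattern value, the replication policy, and sync.group.offsets.enabled are all assumptions for illustration; the secret names and namespaces come from the steps above.

```python
import json

# Assumed: keep replicated topic names identical on the target (items -> items).
# Without it, MirrorMaker 2's default policy would create "<alias>.items".
REPLICATION_POLICY = "org.apache.kafka.connect.mirror.IdentityReplicationPolicy"


def cluster_spec(alias: str, bootstrap: str, scram_user: str, ca_secret: str) -> dict:
    """One spec.clusters entry: trust the cluster CA and log in with SCRAM-SHA-512."""
    return {
        "alias": alias,
        "bootstrapServers": bootstrap,
        "tls": {"trustedCertificates": [{"secretName": ca_secret, "certificate": "ca.crt"}]},
        "authentication": {
            "type": "scram-sha-512",
            "username": scram_user,
            # The SCRAM user's secret holds the password under the "password" key.
            "passwordSecret": {"secretName": scram_user, "password": "password"},
        },
    }


def mirror_maker2(source_bootstrap: str, target_bootstrap: str) -> dict:
    """Build a KafkaMirrorMaker2 resource mirroring rt-inventory-dev into rt-inventory-stg."""
    return {
        "apiVersion": "eventstreams.ibm.com/v1beta2",  # assumed; check the installed CRD
        "kind": "KafkaMirrorMaker2",
        "metadata": {"name": "mm2", "namespace": "rt-inventory-stg"},
        "spec": {
            "replicas": 1,
            # MirrorMaker 2 runs on Kafka Connect; its internal topics live on this cluster.
            "connectCluster": "target",
            "clusters": [
                cluster_spec("source", source_bootstrap, "rt-inv-dev-user",
                             "rt-inventory-dev-cluster-ca-cert"),
                cluster_spec("target", target_bootstrap, "rt-inv-stg-user",
                             "rt-inventory-stg-cluster-ca-cert"),
            ],
            "mirrors": [{
                "sourceCluster": "source",
                "targetCluster": "target",
                "sourceConnector": {"config": {"replication.policy.class": REPLICATION_POLICY}},
                "checkpointConnector": {"config": {
                    "replication.policy.class": REPLICATION_POLICY,
                    # Assumed: write translated offsets into the target's consumer groups.
                    "sync.group.offsets.enabled": "true",
                }},
                "topicsPattern": "items.*|store.inventory",  # hypothetical pattern
                "groupsPattern": ".*",
            }],
        },
    }
```

Printing json.dumps(mirror_maker2(...), indent=2) yields a document that oc apply -f accepts, because it reads JSON as well as YAML. Compare it field by field with the YAML supplied with the demo before relying on it; the demo file may set additional options that this sketch omits.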

Verification

- Access the EventStreams instance

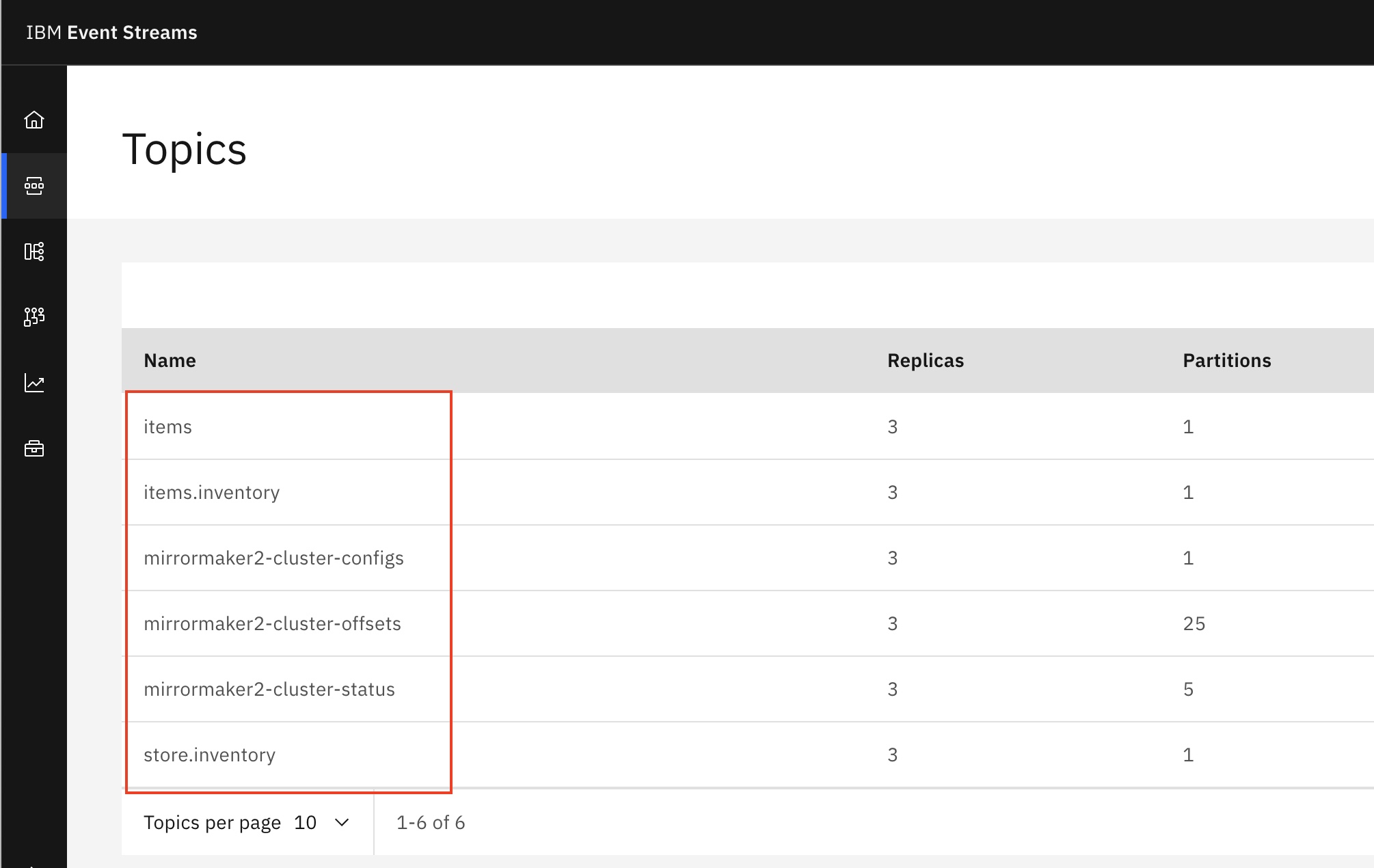

rt-inventory-stgAdmin UI to verify that the replication is working. - From the side menu, select Topics to see the list of Kafka topics created on our Target cluster (Staging Environment).

- You can see that

items,items.inventory, andstore.inventoryKafka topics were created and events are being replicated. - Kafka Topics named

mirrormaker2-cluster-xxxare used internally by ourKafkaMirrorMaker2instance to keep track of configs, offsets, and replication status. - Click on

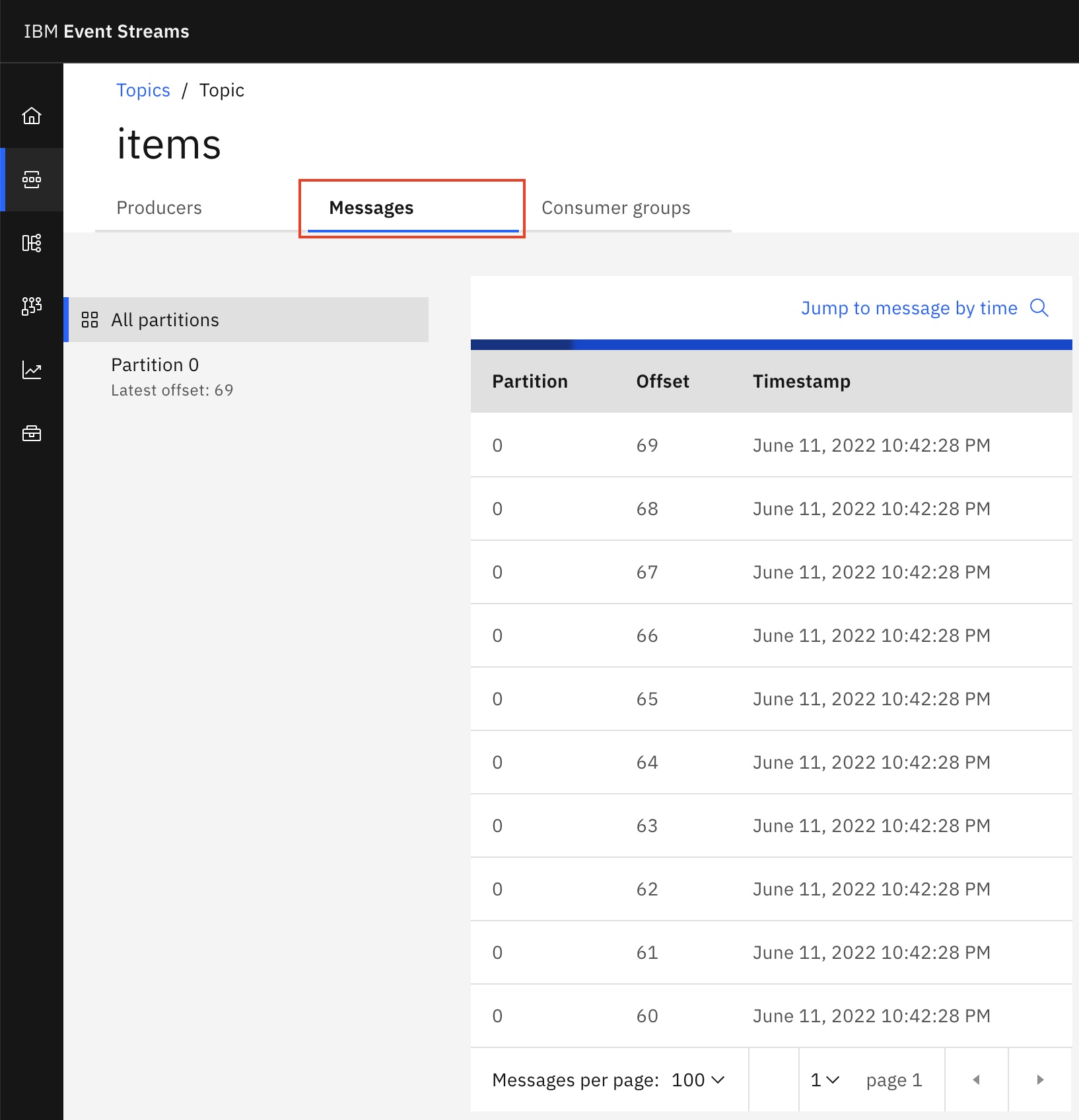

itemstopic then visit the Messages Tab to see that the events are being replicated as they arrive to the Source cluster. The next section will demonstrate how to produce sample events to the Source cluster.
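Which topics show up on the Target cluster, and under what names, follows from the include/exclude patterns and the replication policy. The sketch below models that selection: each pattern must match the whole topic name, and when no exclude list is given, MirrorMaker 2's default topics.exclude value applies. The function names and the example patterns are hypothetical, not taken from the demo's YAML.

```python
import re

# Default value of MirrorMaker 2's topics.exclude setting. In this model an
# explicit exclude list replaces it rather than adding to it.
DEFAULT_EXCLUDES = [r".*[\-\.]internal", r".*\.replica", r"__.*"]


def replicated_topics(source_topics, includes, excludes=None):
    """Return the source topics that would be mirrored, in input order.

    Each pattern must match the whole topic name, like Java's Matcher.matches().
    """
    excludes = DEFAULT_EXCLUDES if excludes is None else excludes
    return [
        t for t in source_topics
        if any(re.fullmatch(p, t) for p in includes)
        and not any(re.fullmatch(p, t) for p in excludes)
    ]


def target_topic(source_alias, topic, identity=False, separator="."):
    """Name of the mirrored topic on the target cluster.

    DefaultReplicationPolicy prefixes the source alias;
    IdentityReplicationPolicy keeps the original name.
    """
    return topic if identity else f"{source_alias}{separator}{topic}"
```

Since the topics appear on the Target cluster as items rather than with a source-alias prefix, the demo's configuration appears to keep topic names unchanged; under the default policy with a source alias such as source, the same topic would appear as source.items.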

Producing Events (Source)

This section verifies that the replication of messages (Kafka events) is actually happening, that is, that a live event feed is moving from Source to Target. We will use a simple Python script (with a starter bash script) that connects to the Source cluster and sends random JSON payloads to the items topic created in Step 1.

The producer script requires Python 3.x with the confluent-kafka Kafka library installed. To install the Kafka library, run `pip install confluent-kafka`.

Perform the following steps to set up the producer sample application:

- On your local machine, create a new directory named producer.

- Download and save the SendItems.py and sendItems.sh files inside the producer directory.

- Move the Source cluster PEM certificate file es-src-cert.pem to the same directory.

- Edit the sendItems.sh script to set the environment variables for the Source cluster connectivity configuration:

  - Change the KAFKA_BROKERS variable to the Source cluster bootstrap address.

  - Change the KAFKA_PWD variable to the password of the rt-inv-dev-user SCRAM user.

#!/bin/bash

export KAFKA_BROKERS=rt-inventory-dev-kafka-bootstrap-rt-inventory-dev.itzroks-4b4a.us-south.containers.appdomain.cloud:443

export KAFKA_USER=rt-inv-dev-user

export KAFKA_PWD=SCRAM-PASSWORD

export KAFKA_CERT=es-src-cert.pem

export KAFKA_SECURITY_PROTOCOL=SASL_SSL

export KAFKA_SASL_MECHANISM=SCRAM-SHA-512

export SOURCE_TOPIC=items

python SendItems.py $1
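The contents of SendItems.py are not reproduced here. As a rough idea of what such a producer involves, the sketch below maps the variables exported by sendItems.sh onto confluent-kafka (librdkafka) configuration keys and sends random JSON items. The payload fields, the function names, and treating the script argument as a message count are assumptions for illustration, not the demo's actual code.

```python
import json
import os
import random
import uuid


def kafka_config_from_env(env=os.environ):
    """Translate the variables exported by sendItems.sh into librdkafka settings."""
    return {
        "bootstrap.servers": env["KAFKA_BROKERS"],
        "security.protocol": env.get("KAFKA_SECURITY_PROTOCOL", "SASL_SSL"),
        "sasl.mechanisms": env.get("KAFKA_SASL_MECHANISM", "SCRAM-SHA-512"),
        "sasl.username": env["KAFKA_USER"],
        "sasl.password": env["KAFKA_PWD"],
        "ssl.ca.location": env["KAFKA_CERT"],  # CA certificate (PEM) used to verify the brokers
    }


def random_item():
    """Build one random payload; the field names are hypothetical."""
    return {
        "id": str(uuid.uuid4()),
        "storeName": random.choice(["Store_1", "Store_2", "Store_3"]),
        "sku": random.choice(["Item_1", "Item_2", "Item_3"]),
        "quantity": random.randint(1, 10),
        "price": round(random.uniform(1.0, 100.0), 2),
    }


def send_items(count, env=os.environ):
    """Produce `count` random items to SOURCE_TOPIC; needs confluent-kafka and a reachable cluster."""
    # Imported here so that the helpers above work with the standard library alone.
    from confluent_kafka import Producer

    producer = Producer(kafka_config_from_env(env))
    topic = env.get("SOURCE_TOPIC", "items")
    for _ in range(count):
        item = random_item()
        producer.produce(topic, key=item["id"], value=json.dumps(item))
    producer.flush()  # wait until every queued message has been delivered or has failed
```

With the variables from sendItems.sh exported in the current shell, calling send_items(10) would send ten items to the items topic.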

Consuming Events (Target)

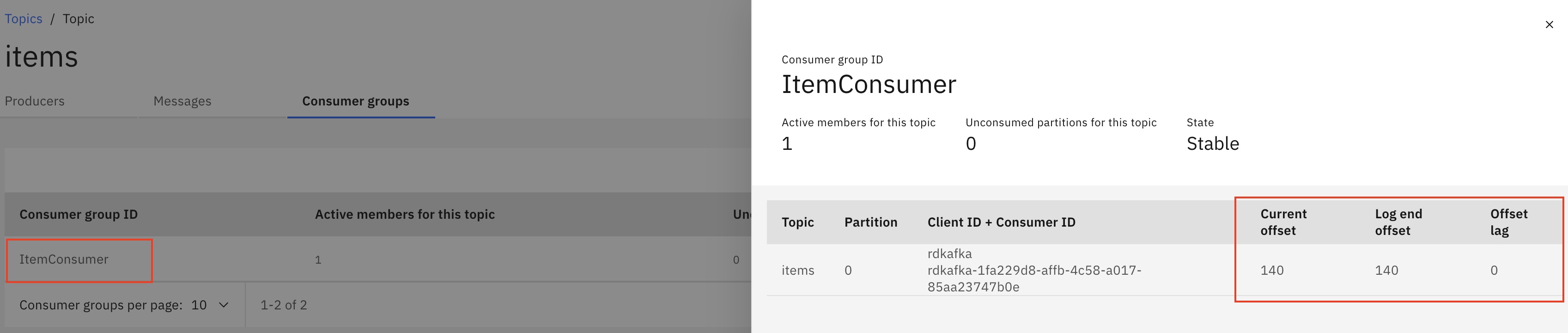

This section simulates failover to the Target (passive) cluster. MirrorMaker 2 uses its checkpoint connector (checkpointConnector) to automatically emit, on the Target cluster, offset checkpoints for consumer groups on the Source Kafka cluster. Each checkpoint maps the last committed offset of a consumer group on the Source cluster to the equivalent offset on the Target cluster.

These checkpoints will be used by our Kafka consumer script to start consuming from an offset on the Target cluster that is equivalent to the last committed offset on the Source cluster.
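To make the checkpoint idea concrete: the same record usually has different offsets on the two clusters, for example when the source topic had already aged out old records before mirroring started. MirrorMaker 2 periodically records offset syncs, pairs of (source offset, target offset), and uses them to translate committed offsets. The function below is a simplified model of that translation with hypothetical names and data. Actual MirrorMaker 2 versions differ in detail, and recent Kafka releases translate more conservatively so that consumers never skip records, so treat it as an illustration of the idea rather than the exact algorithm.

```python
from bisect import bisect_right


def translate_offset(offset_syncs, committed_upstream):
    """Translate a committed Source offset to a Target offset (simplified model).

    offset_syncs: (upstream_offset, downstream_offset) pairs for one partition,
                  sorted by upstream_offset, as recorded while mirroring.
    Returns None if the committed offset is older than every sync point.
    """
    upstream_offsets = [up for up, _ in offset_syncs]
    # Index of the latest sync at or before the committed offset.
    i = bisect_right(upstream_offsets, committed_upstream) - 1
    if i < 0:
        return None
    sync_up, sync_down = offset_syncs[i]
    # Assume records after the sync point map one-to-one onto the target.
    return sync_down + (committed_upstream - sync_up)
```

For instance, if source offset 1000 was mirrored as target offset 0 and source offset 1100 as target offset 100, a group that committed source offset 1050 would resume at target offset 50.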

As with the producer application, perform the following steps to set up the consumer application:

- On your local machine, create a new directory named consumer.

- Download and save the ReceiveItems.py and receiveItems.sh files inside the consumer directory.

- Move the Target cluster PEM certificate file es-tgt-cert.pem to the same directory.

- Edit the receiveItems.sh script to set the environment variables for the Target cluster connectivity configuration:

  - Change the KAFKA_BROKERS variable to the Target cluster bootstrap address.

  - Change the KAFKA_PWD variable to the password of the rt-inv-stg-user SCRAM user.

#!/bin/bash

export KAFKA_BROKERS=rt-inventory-stg-kafka-bootstrap-rt-inventory-stg.itzroks-4b4b.us-south.containers.appdomain.cloud:443

export KAFKA_USER=rt-inv-stg-user

export KAFKA_PWD=SCRAM-PASSWORD

export KAFKA_CERT=es-tgt-cert.pem

export KAFKA_SECURITY_PROTOCOL=SASL_SSL

export KAFKA_SASL_MECHANISM=SCRAM-SHA-512

export SOURCE_TOPIC=items

python ReceiveItems.py $1
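Likewise, a consumer along the lines of ReceiveItems.py could look like the sketch below; the function names and the group id you pass in are hypothetical. It assumes the checkpoint connector is configured to sync translated group offsets to the Target cluster (sync.group.offsets.enabled in recent Kafka versions). Under that assumption, reusing the consumer group id from the Source cluster is what lets the consumer resume near its last committed position instead of falling back to auto.offset.reset.

```python
import json
import os


def consumer_config_from_env(group_id, env=os.environ):
    """librdkafka settings for the Target cluster, taken from receiveItems.sh variables."""
    return {
        "bootstrap.servers": env["KAFKA_BROKERS"],
        "security.protocol": env.get("KAFKA_SECURITY_PROTOCOL", "SASL_SSL"),
        "sasl.mechanisms": env.get("KAFKA_SASL_MECHANISM", "SCRAM-SHA-512"),
        "sasl.username": env["KAFKA_USER"],
        "sasl.password": env["KAFKA_PWD"],
        "ssl.ca.location": env["KAFKA_CERT"],
        # Same group id as on the Source cluster, so offsets that MirrorMaker 2
        # synced for this group are picked up here.
        "group.id": group_id,
        # Only applies when the group has no committed offset on this cluster.
        "auto.offset.reset": "earliest",
    }


def receive_items(group_id, max_messages=10, env=os.environ):
    """Print up to max_messages items from SOURCE_TOPIC; needs confluent-kafka."""
    from confluent_kafka import Consumer

    consumer = Consumer(consumer_config_from_env(group_id, env))
    consumer.subscribe([env.get("SOURCE_TOPIC", "items")])
    received = 0
    try:
        while received < max_messages:
            msg = consumer.poll(1.0)
            if msg is None:
                continue  # nothing arrived within the timeout
            if msg.error():
                print("Consumer error:", msg.error())
                continue
            print(f"partition={msg.partition()} offset={msg.offset()}", json.loads(msg.value()))
            received += 1
    finally:
        consumer.close()  # commits final offsets (auto-commit is on) and leaves the group
```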

The consumer script reads from the items replicated topic in our Target Kafka cluster.

Note

To check the current offset and the offset lag, you can use the Event Streams UI on the Target cluster.
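The offset lag shown for a consumer group is, per partition, the difference between the partition's latest (log-end) offset and the group's committed offset. A minimal sketch of that arithmetic; the function names and input dictionaries are hypothetical, and in a script they could be filled from confluent-kafka's Consumer.get_watermark_offsets() and Consumer.committed().

```python
def consumer_lag(end_offsets, committed):
    """Per-partition lag of a consumer group.

    end_offsets: partition -> log-end offset (high watermark)
    committed:   partition -> committed offset of the group
    Partitions without a committed offset get None, since their lag is undefined.
    """
    lag = {}
    for partition, end in end_offsets.items():
        offset = committed.get(partition)
        lag[partition] = None if offset is None else max(end - offset, 0)
    return lag


def total_lag(lag):
    """Sum of the known per-partition lags."""
    return sum(v for v in lag.values() if v is not None)
```

For example, a partition whose log ends at offset 120 while the group has committed offset 100 has a lag of 20 messages.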

We can see that the consumer application has started to read from the last committed offset.

This concludes our Kafka Mirror Maker 2 Active/Passive mode replication demo.